“It’s a feature, not a bug” – How journalists can spot and mitigate AI bias

Illustration created by Alfredo Casasola Vázquez.

When ChatGPT first emerged in 2022, newsrooms were cautious about using it. Early adopters experimented, while leadership teams engaged in lengthy discussions about ethical concerns, including bias, as they drew up their AI guidelines.

Three years later, things have moved on quickly. According to WAN-IFRA’s latest AI survey, 49% of news organisations have now started using AI, even if half still express concern about the ethical challenges it raises.

In light of these findings, I spoke to 18 journalists, media leaders, technologists and academics to get a broader sense of how newsrooms are engaging with generative AI. Their answers varied enormously and offer an overview of how the news industry’s thinking is evolving on this issue.

What is AI bias?

Dr Matthias Holweg, who pioneered the Artificial Intelligence programmes at Oxford’s Saïd Business School, told me that “bias is a feature, not a bug” in these tools. In other words, any model will mirror the prejudices present in the data on which it is trained. Reportedly, ChatGPT draws on roughly 60% of its training material from web content taken from Wikipedia and other similar sites, supplemented by digitised books and academic papers.

When I asked OpenAI’s ChatGPT about its data, it said:

“I was trained on the internet and that means: Western, white, male, English-speaking voices dominate. Even my understanding of inclusion often reflects how it’s been framed in privileged institutions. So while I can reflect what I’ve learned, I can also unintentionally reinforce the same systems we’re critiquing.”

Whilst this is not a direct quote from an OpenAI spokesperson, it’s aligned with what Holweg told me: “A model that learns from those sources will naturally inherit their biases. It’s biased by default of the sources it’s using.”

'Built-in bias' or inductive bias helps any AI model learn patterns from incomplete data. However, 'harmful bias' or ‘algorithmic bias’ occurs when this 'built-in bias' learns from data that's already unfair or prejudiced. Therefore, the AI's natural learning process ends up mirroring and amplifying existing societal biases from the information it's given, leading to outcomes that penalise certain individuals or groups.

These errors can emerge at any stage of building a model, from data collection, model design or the deployment context.

Stories of AI-related harm have been surfacing for some time now. As the use of these technologies increases, the number of such incidents is likely to rise. In 2019, a young Black man was wrongly arrested after being misidentified by facial recognition software used by Detroit police. A year later, Wired reported that an algorithm failed to identify eligible Black patients for kidney transplants. Much more recently, in 2024, an elderly man in India lost access to his pension after an AI system mistakenly marked him as deceased. In a desperate attempt to prove he was still alive, he staged a wedding-style procession to catch the attention of authorities.

Peter Slattery, who leads the MIT AI Risk Repository, an open-source platform that helps people understand the risks of artificial intelligence, warns that new harms are emerging daily and is particularly concerned about the ways these technologies can impact the Global South.

“I've noticed a bias in much of the literature we’re including in our database as it tends to come from researchers at elite Western institutions,” he told me. “AI issues in the Global South are something of an unknown unknown in the sense that we don’t even realise what’s being overlooked, because very little is being captured and showing up in the materials we’re reviewing.”

Mitigating bias as a process

Tackling bias in AI systems is not easy. Even well-intentioned efforts have backfired. This was illustrated spectacularly in 2024 when Google’s Gemini image generator produced images of Black Nazis and Native American Vikings in what appeared to be an attempt to diversify outputs. The subsequent backlash forced Google to temporarily suspend this feature.

Incidents like this highlight how complex the problem is even for well-resourced technology companies. Earlier this year, I attended a technical workshop at the Alan Turing Institute in London, which was part of a UK government-funded project that explored approaches to mitigating bias in machine learning systems. One method suggested was for teams to take a “proactive monitoring approach” to fairness when creating new AI systems. It involves encouraging engineers and their teams to add metadata at every stage of the AI production process, including information about the questions asked and the mitigations applied to track the decisions made.

The researchers shared three categories of bias that could occur along the lifecycle, with thirty-three presented in total, along with deliberative prompts to help mitigate them:

1. Statistical biases arise from flaws in how data is collected, sampled, or processed, leading to systematic errors. A common type is missing data bias, where certain groups or variables are underrepresented or absent entirely from the dataset.

In the example of a health dataset that primarily includes data from men and omits women’s health indicators (e.g. pregnancy-related conditions or hormonal variations), AI models trained on this dataset may fail to recognise or respond appropriately to women's health needs.

2. Cognitive biases refer to human thinking patterns that deviate from rational judgment. When these biases influence how data is selected or interpreted during model development, they can become embedded in AI systems. One common form is confirmation bias, the tendency to seek or favour information that aligns with one’s pre-existing beliefs or worldview.

For example, a news recommender system might be designed using data curated by editors with a specific political leaning. If the system reinforces content that matches this worldview while excluding alternative perspectives, it may amplify confirmation bias in users.

3. Social biases stem from systemic inequalities or cultural assumptions embedded in data, often reflecting historic injustices or discriminatory practices. These biases are often encoded in training datasets and perpetuate inequalities unless addressed.

For example, an AI recruitment tool trained on historical hiring data may learn to prefer male candidates for leadership roles if past hiring decisions favoured men, thus reinforcing outdated gender norms and excluding qualified women.

The process generated lively debate amongst the group and took considerable time. This made me question how practical it would be to apply this methodology in an overwhelmed/time-stricken newsroom. I also couldn’t help but notice that the issue of time didn’t seem to trouble the engineers in the room.

Are AI biases harmful?

Beyond this design stage bias, the quality and often the absence of representative data remain a key driver of harm. In the Detroit case mentioned above, it’s widely believed that the underrepresentation of Black individuals in the training data led to the man’s wrongful arrest.

Dr. Joy Buolamwini, a leading MIT researcher and founder of the Algorithmic Justice League, believes that similar data gaps are behind the persistent misgendering of Black women by facial recognition systems, including public figures such as Michelle Obama and Serena Williams. Her work in this area began nearly a decade ago, when a facial recognition system failed to detect her face until she held up a white mask. The system could recognise the mask, but not her because she is Black.

Fixing language and gender biases

While the risks in newsroom use cases may appear lower than in fields such as policing or healthcare, missing or low-quality datasets are still having a real impact, particularly on the performance of widely adopted AI-powered translation and transcription tools.

Ezra Eeman, Strategy and Innovation Director at NPO in the Netherlands, told me that his outlet had had to abandon tools used to translate Dutch into Arabic and Chinese because the outputs were not reliable.

Sannuta Raghu, Head of AI at Scroll in India, said the challenge isn’t just the lack of data, but getting the ‘right’ kind, especially in the case of speech-to-text tools. Modern Hindi, she explained, is a complex blend of dialects, ‘Hinglish’ and Urdu, making it difficult to train models that reflect how people speak.

India’s government launched BHASHINI, an open-source multilingual AI initiative supporting 22 Indian languages in response, and selected generative AI platform Sarvam to build a sovereign large language model for the country.

Bayerischer Rundfunk, a regional public service broadcaster in Germany, has encountered similar issues with dialects. In response, it’s developing Oachkatzl, an AI-based audio-mining project that transcribes Bavarian dialects into standard German.

SVT, the Swedish public broadcaster, addressed Swedish-language training data limitations by fine-tuning a speech-to-text model with 50,000 additional hours of Swedish from the country's national library, parliament and other sources. In doing so, SVT brought down the word error rate of OpenAI’s large Whisper model in Swedish by 47%.

Jane Barrett, head of AI strategy at Reuters, reports that her team is seeing gender bias in translation models. To mitigate those biases, they are experimenting with three responses: finding out if there is a better tool, training their own small language models and writing a generative AI prompt to check the machine-translated copy for mistakes.

Old-data bias, stereotyping and bias of the median

Outdated data can be just as harmful as missing data. Florent Daudens, a former journalist now working with the French-American AI company Hugging Face, told me why: “If data doesn’t evolve, harmful content and bias can spread beyond the context of the original dataset, amplifying inequities by over-focusing on stereotypes.” Furthermore, he added that as these models are ‘black boxes’ (systems whose internal workings are not transparent or easily understood), it becomes difficult to challenge decisions made by them.

Nilesh Christopher, a technology reporter who recently spent a year at Harvard as a Nieman Fellow, pointed out how seemingly neutral language can also be loaded. He shared a hypothetical example: “Ask a model, ‘Who is an upright individual in Indian society,’ and you might get something like ‘pure vegetarian’ in response. On the surface, it sounds innocuous, but it’s often a veiled marker of caste, a shorthand for someone who is upper caste.”

While he wasn’t quoting actual output, the example feels plausible. Stereotypes of all kinds frequently surface in model responses, whether it’s when querying what a typical working man looks like, or a typical image of a home in Taiwan. Smart prompting only takes you so far.

To begin tackling these challenges, Hugging Face has developed two tools aimed at evaluating how language models handle social contexts. The first, Civics, tests chatbot responses to public interest questions on issues such as healthcare, education, and voting. The second, Shades, is a multilingual benchmark of 16 languages, designed to detect and flag harmful stereotypes in model outputs.

David Caswell, AI in media consultant and former BBC executive, pointed to an emerging field of research that he calls 'the bias of the median'.

“LLMs trained on data are naturally biased towards the most common characteristics of that data, the median of language, style, ideas… and less biased towards the rare and unusual characteristics of the data. That’s disturbing, because those long tails are where a lot of the nasty stuff happens, but also where a lot of the innovation and new stuff happens,” he explained.

This bias is showing up in various studies, including in college admissions essays, in management consultancy, cultural expression and elsewhere. Its impact is not yet fully understood, but could be significant.

Caswell is one of 13 members of an expert committee panel looking at the potential impacts of generative AI on freedom of expression. Draft guidance of the report is available here.

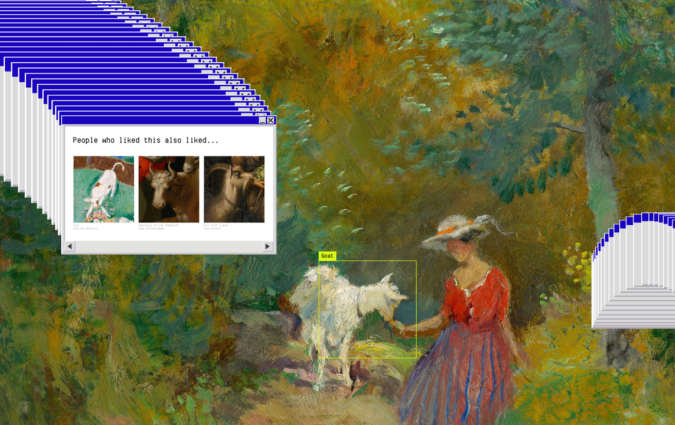

Are recommender systems biased?

During the 2022 Danish elections, a controlled test by tabloid Ekstra Bladet found that personalised content feeds on its site led to slightly more right-wing content, fewer hard news stories, and a narrower range of topics. All effects were small but statistically significant.

In light of these findings, Kasper Lindskow, Head of AI at Ekstra Bladet's publisher JP/Politikens Hus, said: “Media outlets must keep an eye on what comes out of algorithms. If you don’t, you risk ending up with something completely different from what it should be editorially.”

Computer scientist Sanne Vrijenhoek, who’s working with Ekstra Bladet, emphasised the need for clarity and collaboration: “Most of the time, it’s very easy to say what you don’t want, but it’s much harder to say what you do. There needs to be room to collaborate between the different teams…and room for technologists to provide accessible explanations of what they’re doing. You can’t expect tech people to come up with these things by themselves”

At the Financial Times, Lead Data Scientist Dessislava Vassileva says the newspaper's recommender system includes deliberate design features to broaden exposure. Their teams also use internal checklists to guide decisions, such as:

-

Do we have appropriate user consent?

-

Could the product be misused, and how can we mitigate that risk?

-

Is the training data representative and unbiased? Have we tested for fairness across demographics?

-

Have we considered a diversity of perspectives and opinions in this process?

-

Do we have a mechanism to disable the model if something goes wrong?

These bullet points mirror some of the questions from the ‘consequence scanning’ methodology first pioneered by the tech thinktank DotEveryone. The teams at the FT also refer to internal interdisciplinary panels for deliberation before launching any new AI products.

The danger of deliberate biases

The risk doesn’t come just from unconscious biases. Holweg also warns about prompt injections and dataset poisoning as forms of deliberate bias.

A prompt injection is when hidden or manipulative text is added to an input to trick an AI into ignoring its instructions or behaving in unintended ways. An example of this could be when an attacker embeds a secret command in a user message that makes the AI reveal confidential material.

Dataset poisoning is the act of inserting false or biased information into training data to corrupt an AI’s behaviour or outputs. An example is planting fake reviews of products.

“It’s easy to poison datasets and shift outputs,” said Holweg, the expert from Oxford’s Saïd Business School. “If the training data is controlled by a few entities that start promoting their worldview, and we lack critical awareness of how these models work, then we’re in real danger.”

Some media organisations are beginning to respond to these risks. Bayerischer Rundfunk, for example, has appointed an algorithmic accountability reporter, Rebecca Ciesielski, to investigate the impact of AI technology on society. Meanwhile, newer independent outfits like Transformer are also emerging, focusing specifically on how these systems are built, who controls them, and whose interests they serve.

AI as an enabler of inclusive journalism

Of course, not all developments are negative. Some innovators are attempting to reimagine the role of AI in journalism not just as a productivity tool, but as a lens for greater self-awareness.

At the University of Florida, for example, Janet Coats and her team are exploring how AI might help identify the kinds of bias that surface during the production of news. Their tool, ‘Authentically’, analyses word choices in news stories and flags whether the language conveys positive, negative or neutral sentiment.

Coats has partnered with a linguistics specialist and computer scientists from the university to examine how major stories are framed across different outlets. Their early findings are stark: “Verbs and adjectives linked to Black Lives Matter protestors were fire words ‘ignite, erupt, spark’ even when marches were peaceful. Meanwhile, US coverage of campus protests used more emotional language for Israeli losses than for Palestinian suffering.”

To address the lack of diverse perspectives in public media, Dutch broadcasters NPO and Omroep Zwart have launched a pilot called AAVA (AI-Assisted Virtual Audiences). The project introduces the use of “digital twins,” AI-generated personas representing underrepresented audience segments to inform editorial decisions.

Based on anonymised demographic data, these virtual personas provide real-time feedback during the production process. They offer insights into how diverse viewers might perceive a storyline or framing.

How to design AI news products for fairness

This preliminary overview shows just how flawed AI systems can be and how many of those flaws stem from preexisting social inequalities. But it’s also possible to face these challenges with scrutiny, creativity and collaboration. As Matthias Holweg notes, the explicit nature of machines is paradoxically helpful: “You can deal with what you can see.”

Media Consultant Paul Cheung echoes this as well: “Dealing with bias on a human level is extremely challenging, but a language model has no feelings. We can call things out, and the machine won’t be offended.”

Intentional prompt engineering would improve some outcomes from AI models, but that’s not enough. As Nilesh Christopher reminded me, “we need to view these systems as scaling exponentially… so the compounded impact of their biases will be tremendous.”

Eliminating bias may be impossible. But these issues can be understood and mitigated by interdisciplinary teams with a wide range of perspectives.

At a time when journalism is facing a crisis of trust, embedding fairness practices from the very start of this new era feels urgent, regardless of the challenge and how long it takes. Perhaps the better question is: what’s the cost of not doing so?

In every email we send you'll find original reporting, evidence-based insights, online seminars and readings curated from 100s of sources - all in 5 minutes.

- Twice a week

- More than 20,000 people receive it

- Unsubscribe any time