Your AI butler will serve you “factslop”: How zero click hurts the consumer, and the newsrooms LLMs depend on

At a recent conference in Warsaw I asked an audience of media representatives and diplomats to raise their hands if they had ever searched for a fact using generative AI, including the summary functions of search engines, and then acted upon the information without going to the primary source. Most hands went up.

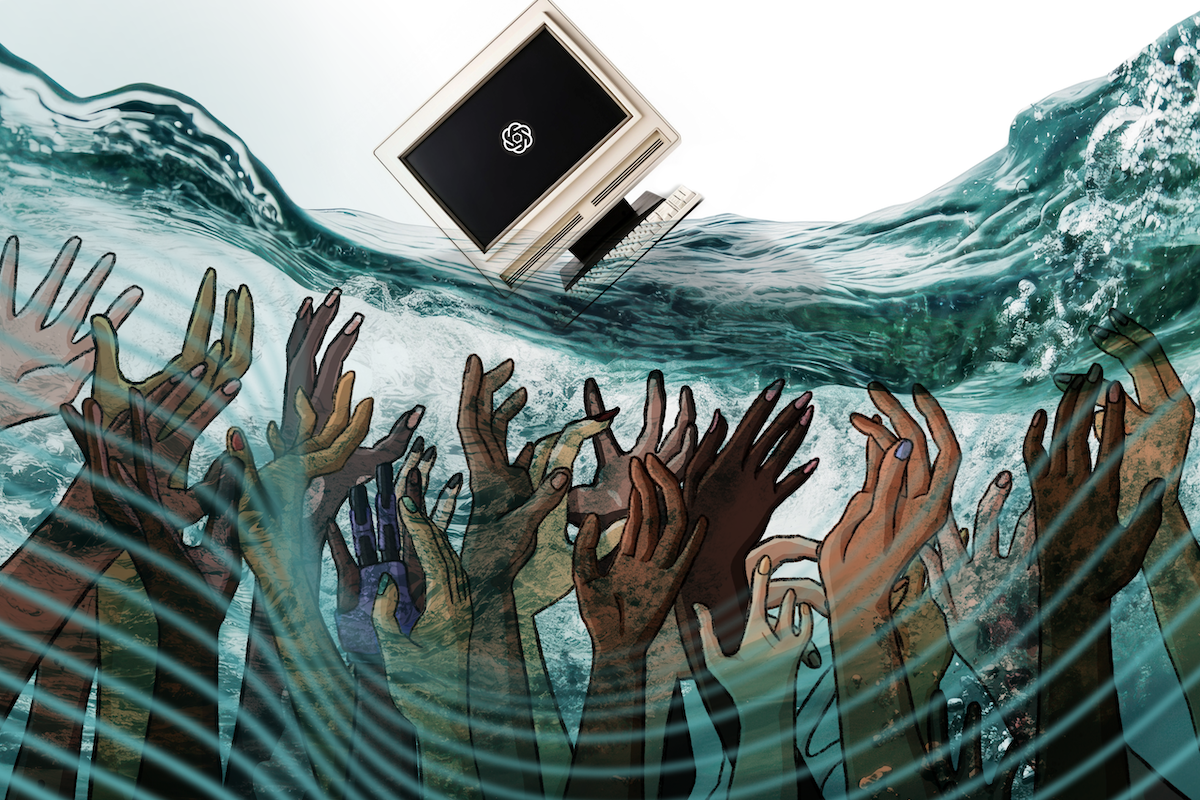

It was a visual confirmation of how generative AI is reshaping our relationship with facts. Information search has been among the three core uses of ChatGPT since it went mainstream, and experts have been stunned to see how habits around searching for information are changing before their eyes.

About 10% of humans now use generative AI on a weekly basis, and even more (34%) according to a recent Reuters Institute report based on survey data from six countries. This global diffusion, albeit uneven, amounts to a paradigm shift. GenAI brings an awesome, almost magical, user experience. It’s like your personal butler serving you exactly what you want right now, on a silver platter.

But what you will increasingly get from this butler is “factslop.” Rather than ushering in an era of information abundance, AI is likely to make access to the truth very much harder. It will also starve many institutions that produce enough of the truth for machines to ingest. And this scarcity of supply will impose an impossible cognitive cost on finding a single fact.

Here is how it’s likely to happen, and some history to explain why.

Back in 2006 Facebook had just introduced its News Feed, making the platform “stickier” for users and kicking off a major shift in information consumption. The philosophy its founder Mark Zuckerberg later laid out was that his platform enabled free expression for all, thus promoting truth and democracy. Elon Musk and others later parroted the same line.

But instead the truth “sank,” and the democratic debate on social media drowned with it in a tsunami of fake news and other malinformation. Yuval Noah Harari, the historian and author, explains that the truth is messy and complicated; it’s often unpleasant and even painful. It’s also costly to produce. That’s why humanity has entire institutions devoted to truth-seeking: universities, research centers, courts of law and, of course, the news media.

Fiction, by contrast, can be made at scale. It can be very simple and very alluring. Aided by algorithms, fiction floods social networks and seeps into society. “In this competition between costly, complicated and unpleasant truth, and cheap, simple, attractive fiction, fiction wins,” Harari says.

As the news media lost the competition for eyeballs, it also lost advertisers and business viability, becoming an industry in decline and falling behind technologically. Many outlets are now largely at the mercy of social media and search engines for traffic and engagement with their audience.

In comes generative AI, a new disruptive technology. Instead of creating native versions of content for social media and doing search engine optimization (SEO), the media suddenly need to worry about generative engine optimization (GEO), or AI-driven discovery. To keep being seen by the audience, news publishers first need to be “seen” by the machines.

But instead of jumping at the opportunity, many large media holdings are shielding their content from Large Language Models (LLMs), and smaller media across the globe have yet to wake up to the new challenge.

A lot of big players opted to ban AI crawlers in order to protect their intellectual property. This started happening once the media discovered that their content was unlawfully used for training LLMs. Some outlets in the US are suing OpenAI, Anthropic, Perplexity, Google, Microsoft and other companies for breach of IP rights. The same is happening in Canada and some European countries.

In September some publishers, including Penske Media (Rolling Stone, Variety) and The Hollywood Reporter, took a new step, suing Google over its AI summaries, which they claim are killing traffic to their original stories and causing them “millions of dollars of harm.”

Other media companies choose to strike deals with the biggest AI players, collectively or individually. However, while these deals made frequent headlines in 2024, this year they are much less frequent, suggesting that the appetite for the news media’s data is dwindling.

Nick Thompson, CEO of The Atlantic and a former tech journalist, said recently that AI companies will no longer pay for training content: “It existed for a brief window when training data was important for AI companies. Training data is no longer important.”

“They will pay for real-time information, they want current news, breaking news, and they want to be able to feed that into the models,” he said. “There will be a business there.”

But even if there is business to be had, it tends to be the industry’s behemoths that strike those deals. Medium and small players globally, and even leading publishers in many markets, are left behind, devastated and bleeding traffic to AI. They often don’t have the capacity to even understand the magnitude of the AI disruption, let alone respond to it.

A glimpse of the future?

In the past two months I served as a juror in several grant competitions for about six dozen small and medium-scale media outlets scattered across different markets. I got a glimpse of their strategies for the coming years.

How many of them featured any sort of AI-related actions beyond basic newsroom use? Zero. Many still struggle with SEO, and don’t know anything about GEO.

But can you guess who does? Some bad guys. In the spring of 2024 Insikt Group, a US-based intelligence research group, published a report identifying Russian information and influence groups that used “generative AI to plagiarise mainstream media outlets, including Russian media outlets, Fox News, Al Jazeera, La Croix, and TV5Monde.”

The content was cooked, with Russian narratives added, and spread through a network of websites for any human to read and for AI bots to scrape. They were doing GEO at a time when the term was still largely unknown.

According to one estimate, just one network studied by Insikt produces 3 million fake news articles per year, though its reach among human readers is unknown. By comparison, Reuters, the largest news agency in the world, writes about 2 million pieces a year.

It’s not clear how many groups like that exist globally. But according to a study from NewsGuard, their content has “infected” 10 major AI chatbots, which now spit out Kremlin propaganda in response to information queries a third of the time.

On top of these data biases, there are a myriad of other biases that make AIs lie or hallucinate, including deliberately built-in ones, as in the case of the Chinese models. And if that is not enough, LLMs are also learning to lie for likes and profit, defying explicit instructions.

The dark future ahead

Fast forward a few years from now. New, AI-based operating systems will have taken off, cocooning the user inside a comfortable, personalised bubble they never have to leave. To feed the user’s information needs, LLMs will be ingesting everything that’s produced in real time.

But since traffic doesn’t leave the bubble, many more information providers will have collapsed. So, who will remain on the information supply side? Anyone who has a stake in persuading you, selling to you, lying to you, and manipulating you. Not journalists and news organisations informing you. And those who remain will produce a lot of factslop: information that looks legitimate but is anything but.

And if you want to find out whether an up-and-coming politician has been implicated in any corruption while running for local office, good luck with that. Outside the cocoon, the cognitive load required to plow through the noise to find that single fact will be insane, and you will no longer be used to it, just as we are no longer used to walking to libraries to search for old books.

So, get ready for factslop, those plausible answers with hidden conflict; nutritious-looking, but with zero calories.

Katya Gorchinskaya is a media and strategy consultant with three decades of experience in editorial leadership, corporate governance, entrepreneurship, and institutional transformation. She works globally with media and educational institutions to modernize journalism, media education, and business models.
