From Musk to Brussels: three leading experts on the future of free speech online

Broken Ethernet cable is seen in front of Twitter logo in this illustration taken March 11, 2022. REUTERS/Dado Ruvic/Illustration

On October 28, Elon Musk's takeover of Twitter was confirmed, following months of speculation, negotiation and even a lawsuit. The saga began on April 14, when Musk announced his intention to buy the social network, causing both elation and panic among its hundreds of millions of users, including the many journalists who use it every day. One of the core issues at the heart of the announcement was free speech. Musk is widely seen as a free speech absolutist, although his recent statements about the issue have been somewhat unclear.

On April 25, Musk tweeted: “I hope that even my worst critics remain on Twitter, because that is what free speech means.” A day later, he posted: “By ‘free speech’, I simply mean that which matches the law. I am against censorship that goes far beyond the law. If people want less free speech, they will ask the government to pass laws to that effect. Therefore, going beyond the law is contrary to the will of the people.”

Will this acquisition fundamentally alter how Twitter looks and feels? Will Musk change any rules? Will he allow Trump back on the platform as he’s promised to do? It’s too soon to know the answers to these questions. What we do know is that online speech and its regulation are being hotly debated as we come to terms with issues such as widespread online harassment and targeting of public figures, especially women journalists and those with marginalised identities.

A few days after Musk unveiled his offer, EU institutions announced they had reached a provisional agreement on the Digital Services Act (DSA), the EU’s new package of regulations for moderating and managing internet content. And as Musk's acquisition was finalised, the new rules were published in the EU's Official Journal.

The DSA aims to speed up the removal of illegal content and tackle disinformation. Tech companies will also face new transparency obligations and be required to assess and mitigate the harms caused by their products.

The DSA and the upcoming Digital Markets Act (DMA) are widely seen as a crackdown on Big Tech. The new legislation will impact all the main players, including Amazon, Google, YouTube, Spotify and social media giants like Facebook and Twitter. If companies do not comply, they will face fines of up to 6% of their global revenue.

EU Commissioner Thierry Breton has already made it clear that Musk's Twitter will not be exempted.

👋 @elonmusk In Europe, the bird will fly by our 🇪🇺 rules. #DSA https://t.co/95W3qzYsal

— Thierry Breton (@ThierryBreton) October 28, 2022

This legislation may come into force before the end of 2022 and could have an influence on internet regulation in other countries.

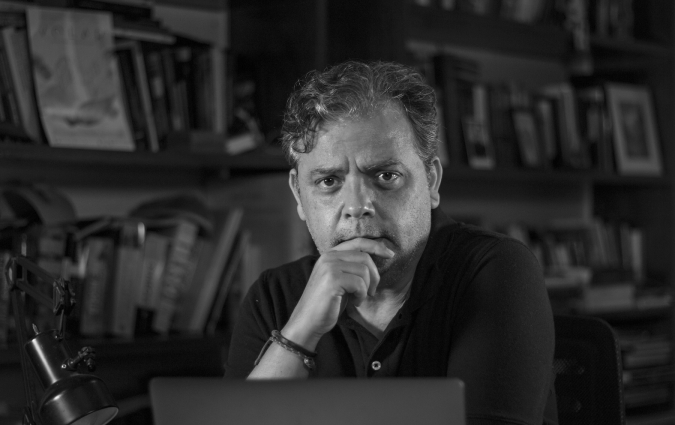

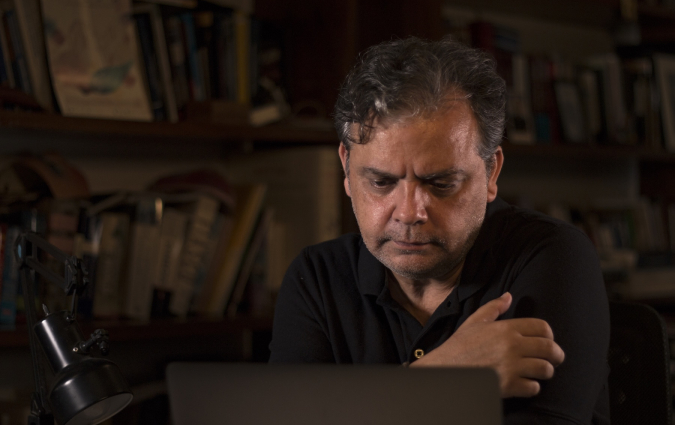

Before the DSA's details were published and Musk's acquisition became a reality, we spoke to three leading experts to help us delve deeper into the effect of this plan and its related issues of regulating online speech, focusing on EU and US legislation. Jacob Mchangama is a Danish lawyer and the author of Free Speech, a history of free speech from Ancient Greece to the present day. Ethan Zuckerman is an American blogger and media scholar, and the author of the books Mistrust and Rewire. Jennifer Petersen is a media scholar and the author of How Machines Came to Speak, a book outlining how legal conceptions of ‘speech’ have changed as new media technologies have developed.

Free speech: are platforms different?

Protected by law (albeit differently) both in the EU and in the US, freedom of speech, and the extent to which this fundamental right applies to what we post online, is one of the most important points in this debate.

Jacob Mchangama distinguished between restrictions on speech by a government (which is what free speech laws protect against) and restrictions by a private party such as a privately owned social media platform. While this distinction is important, he believes platforms like Twitter and Facebook are now so large and centralised that their regulation does have an impact on “a broader culture of free speech, which is necessary for the underlying ideals of tolerance of social dissent and broad mindedness to thrive.”

Mchangama explains there's been a development from an initially more horizontal, decentralised internet to a more vertical and centralised internet with these platforms. “Just by the sheer fact that they facilitate so much debate and information and the fact that traditional media and politicians are dependent on some of these platforms to spread traditional news and viewpoints, this means that they're not just like any other actor out there,” Mchangama said.

Not all content moderation decisions affect free speech in the same way, he added. There is no neat overlap between the two but there is a relationship. For example, moderation decisions that remove graphic images of warzones can have an impact on the public record of potential war crimes. Mchangama also said that the size of these platforms and the specific dynamics found on them mean that drawing clear lines around acceptable restrictions and those that go too far is hard to do.

Can platforms be more transparent?

Mchangama pointed to a relatively new development in law in which “governments, and even liberal democracies, are now essentially obliging platforms to restrict speech to do government-mandated content moderation on specific categories. In that sense, it's not purely private power, but public power which has been outsourced to them, even though they didn't have a choice.” An example of this, he says, is the Digital Services Act.

Both Mchangama and Ethan Zuckerman pointed to the DSA’s provisions on transparency as a good development. The new set of transparency obligations and audits include allowing the European Commission and the EU’s member states to access the algorithms of large platforms and empowering users to find out how content is recommended to them. Users would also be able to choose at least one option for a recommender system that’s not based on behavioural profiling.

According to Zuckerman, the legislation’s proposals would be effective in allowing experts to test platforms’ models and understand how they work. “What I have been seeing with DSA indicates a more sophisticated understanding of how you would figure out what those algorithms are doing,” he said.

“[The measure] that I'm most excited about is steps towards platforms sharing their data with authorities and researchers,” Zuckerman said. “We have been sort of demanding that if we're going to say anything intelligent around online risk, and what's happening in our online lives, we need vastly more information to work from and DSA at the very least seems to acknowledge that need.”

Is the EU going too far?

In a piece published in February 2021, Dr MacKenzie Common and Prof. Rasmus Kleis Nielsen identified three conditions established in the European Convention on Human Rights for the enforcement of legal restrictions on online speech: that they are clearly and precisely prescribed by law, only introduced where they are necessary to protect other fundamental values, and are proportional to the specific threat at hand. There are concerns that the DSA’s tough stance on the speed at which platforms remove illegal content could conflict with these conditions.

Mchangama said that platforms such as Facebook already remove more legal content than the amount of illegal content they leave up. He fears that the DSA’s stricter approach will lead to platforms rushing to remove posts to avoid being penalised, and as a consequence removing even more content that may prove to be legal. “If we're serious about free speech as a fundamental value, you should err on the side of permissiveness, rather than incentivise these platforms to remove as much content as possible,” Mchangama said.

Another issue he found is that the new legislation defines ‘illegal content’ as that which is illegal under EU law and that of individual member states, such as hate speech. Mchangama finds this definition too broad: “It's extremely dangerous. What happens when an illiberal regime comes in, in a particular country? All they have to do is prohibit more categories of speech, and then the European Union has essentially created for them an online machine to remove all the content that they don't like.”

In Mchangama’s view, the world is living through a free speech recession that has lasted for a decade or so. “It's the duty of open democracies to stand guard on free speech when the world is becoming more authoritarian. Ultimately, the European idea that we need to be intolerant of intolerance is misguided and a cure worse than the disease,” he said.

Banning Kremlin outlets

Mchangama also criticised the EU’s decision to ban Russian state outlets Russia Today (RT) and Sputnik in early March. “While meant to signal strength and resoluteness, the EU’s message actually portrays European democracies (and, in particular, their citizens) as gullible simpletons who are easily manipulated by the propaganda of authoritarian states. This demonstrates a fundamental lack of trust in the very citizens from whom democratic politicians derive their power and legitimacy,” he wrote.

According to Zuckerman, we need to wait to know more about the fine print of the DSA, as well as how it will play out in practice. “The actual battles over compliance have an enormous amount to do with what are full and complete actions, how willing are platforms to battle over whether they're going to take those actions,” he said. “The platforms have their combination of technical affordances and rules of the road. And both can be ways in which they adapt to this new form of regulation. Until we actually understand not only what's in the bills but what adaptation comes out of it, we're not really going to know what happens.”

The resolution on which this part of the DSA is based expresses a need for an updated, united EU approach to the persisting presence of illegal content online: “Today’s prevalence of illegal content online and the lack of meaningful transparency in how digital service providers deal with it show that a reform is needed. The path of voluntary cooperation and self-regulation has been explored with some success, but has proven to be insufficient on its own.”

Will we see a ‘Brussels effect’?

Some, including Mchangama, predict the DSA is likely to have impacts beyond the European Union’s 27 member states. This is what Finnish-American scholar Anu Bradford has called the ‘Brussels Effect’: what happens when companies choose to base their global policies on EU rules. This would transform the DSA into a new standard for online regulation worldwide. However, not everyone thinks this will happen.

Referencing the EU’s General Data Protection Regulation (GDPR) and how it has not spread to the US and other areas, Zuckerman said: “Do not assume necessarily that the changes that are made to accommodate the DSA and the DMA are going to change the behaviour of platforms globally. So far, we haven’t seen that.”

In the US, the conversation on speech regulation tilts far more in favour of allowing speech, sometimes to the detriment of fundamental rights like privacy.

There are two sides to this position, Jennifer Petersen explained. One is that “many journalists in the United States are quite worried about EU-style privacy laws. If you're thinking about journalism, this might make it difficult to do your job as a journalist or put restrictions on your ability to have a story up for a long time, or to name a suspect or a victim or something like this. It feels like an infringement on press freedom, and people are very worried.”

The other side of the coin is what Petersen referred to as ‘free speech opportunism’, “where large corporations argue that their products are expression, and in doing so, gain a really large, very broad protection from regulation”. Examples she gave are Google arguing that its search results are opinion, and software company Clearview AI using a First Amendment defence against charges that it breached privacy laws by scraping social media for facial recognition data (Google was successful; Clearview AI recently settled its case).

Corporations’ ability to make (and often win) these arguments comes from a shifting interpretation by US courts of what can be considered speech and thus protected by the First Amendment, Petersen explained.

“The courts used to talk about speech and decide, ‘Is something speech?’ by looking for people and social activities, speaking, shouting, gesturing. Beginning in the 1940s, they shifted this to looking for flows of information or messages that were circulating. So you have a shift in how we look at communication, whether we see it as a social activity that people are engaging in where we use verbs in order to talk about it, or whether we look for messages and artefacts and information, which are really nouns in a sense. This really shifts the ground of free speech, reasoning and discourse at the legal level. It enables the first granting of corporate speech rights without actually declaring that corporations have speech rights,” Petersen said.

When it comes to online regulation in the Global South, it boils down more to whether there is immediate political pressure on platforms, and whether that market matters to them or not.

“Myanmar may have been a market that Facebook was largely ignoring when there was incitement to violence against the Rohingya Muslims there. India is a market where platforms have been extremely conscious of speech because of the size of the market, and because many of these platforms have their largest user base in this country,” Indian journalist Nikhil Pahwa said in a conversation with Prof. Nielsen on the Reuters Institute podcast. “At times, what has happened – and it is in the nature of platforms and in the nature of the Internet and the nature of intermediaries to allow this to happen – is that platforms tend to only regulate when they need to regulate, when they’re called upon to regulate.”

A vision for the future

The three experts I spoke with reminded me that there’s still a lot about the DSA, its impact and the future of online speech and social media platforms that we don’t know yet.

Looking to the future, Mchangama and Zuckerman suggested non-regulatory options for changing the way we interact with one another online.

Mchangama suggested in-app filters as a possible option for individual users to restrict the content they are exposed to without imposing this on everyone. “I think that's a much more agile and flexible and less intrusive way of approaching content moderation,” he said. A new tool that reflects this idea is the Thomson Reuters Foundation’s TRFilter, a web application designed for journalists to sync up to their social media accounts and automatically flag abusive content.

Zuckerman’s vision for a better online environment is one of “a decentralised version of social media, where we end up participating in dozens of different social networks, and we have tools to help manage those social networks so that we're actually engaged in the governance of them and we have tools that help us manage our experience of multiple social networks at the same time.”

These social networks would be a lot smaller than our current platforms, and focused on a specific aspect of our online experience. In his view, getting users to have a say in how platforms work would help to hold them accountable.

For now, it’s hard to imagine a shift away from the current environment, dominated by big US-based digital platforms. In the online world, however, change has often been fast and unexpected. Whether new ownership or EU regulation has a meaningful impact remains to be seen.

This article has been updated since it was published in May 2022 to reflect new developments.