ChatGPT is now online: here’s a look at how it browses and reports the latest news

Image generated by Bing-Chat with GPT-4, supported by DALL-E 3.

On 27 September, OpenAI announced that an internet-browsing version of its popular chatbot ChatGPT would be available to paying subscribers. Before then, users were unable to access up-to-date information through the chatbot, which had been limited to data available online up to September 2021.

The new feature allows paying subscribers to use a ChatGPT version which browses the internet with Bing, Microsoft’s search engine. This version was tested in May but was swiftly taken offline after it was revealed that it could be used to access paywalled content without permission.

The current version has been updated so that websites have the option to block OpenAI’s web crawler tool, which scrapes pages for information. This has led to several news publishers blocking ChatGPT from accessing their sites. However, many still allow ChatGPT to access and use their content.
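For readers curious about how this kind of opt-out typically works, here is a minimal sketch. It assumes the publisher uses a robots.txt file and that the crawler identifies itself with the user-agent token `GPTBot`; the robots.txt content, the `is_allowed` helper and the example.com URL are hypothetical and not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an illustrative publisher (not a real site).
# Assumption: the crawler announces itself with the user-agent token "GPTBot".
EXAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    story = "https://example.com/news/story"
    print("GPTBot allowed:", is_allowed(EXAMPLE_ROBOTS_TXT, "GPTBot", story))
    print("Other crawler allowed:", is_allowed(EXAMPLE_ROBOTS_TXT, "SomeOtherBot", story))
```

A robots.txt rule is a request rather than a technical barrier: it only works if the crawler chooses to honour it.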

When we saw the announcement, we decided to put ChatGPT to the test and find out how it fared when asked about news stories. Did the chatbot offer accurate and up-to-date information? How would it handle contentious topics? How would it handle requests in languages other than English? What sources would it use?

This is the spirit in which we tested ChatGPT. We asked it a range of questions about the news and we aimed for a variety of stories in order to understand how it could work as a tool to access the news.

It’s important to stress that this is not a piece of academic research but just a first look at a new technology. Moreover, the ‘Browse with Bing’ feature we used is still a beta version. This means it’s still being developed and it may evolve in the months to come. OpenAI includes a clear disclaimer at the bottom of the chat interface: “ChatGPT may produce inaccurate information about people, places, or facts.”

The interface for the browsing version of GPT-4 looks like a standard ChatGPT conversation: a bar at the bottom of the page for the user to type out a request. Once the question is asked, an answer is generated below.

Unlike other versions, though, there’s a small browsing icon in the answer section that lets the user know when the bot is browsing the internet for an answer, indicating the websites it goes to and when it finishes browsing. Another important detail is that it provides links to the main sources it uses in the form of superscript numbers, so readers can check where the information has come from and find more context.

How fast is ChatGPT with breaking news stories?

The first thing we wanted to know was how ChatGPT reacted to breaking news situations and how fast it was in incorporating new elements into its answers on developing stories.

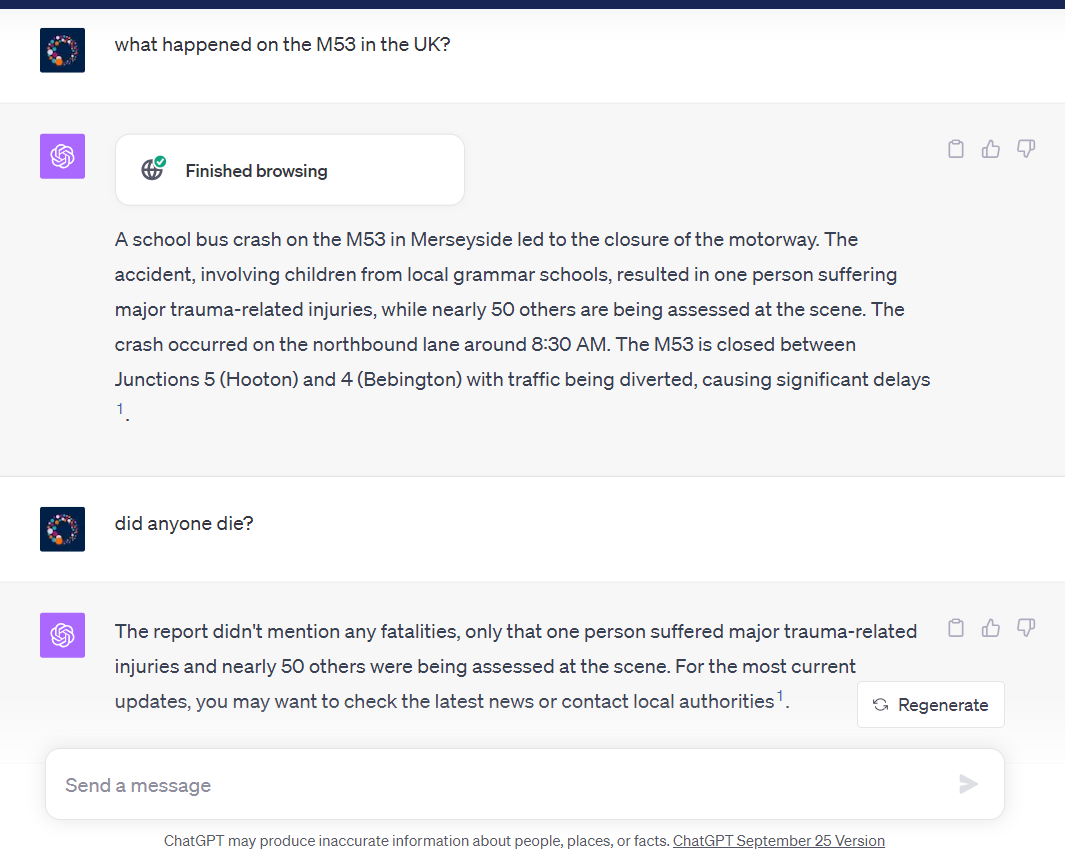

We tested it on a couple of breaking news stories, with mixed results. On 29 September, a news story broke in the UK regarding a tragic school bus crash. The story was being updated live on the BBC website. The public broadcaster’s mobile phone app was sending out notifications with key updates too.

The BBC app sent out a push notification with the news that two people had died as a result of the crash. When I asked 50 minutes later, ChatGPT’s response was not up to date. It gave information about the accident but nothing about any deaths.

When prompted about this specific detail, it relied on the source for its previous answer, an article from the i newspaper that had been published earlier in the day. I then asked specifically what the BBC was saying about the incident, and ChatGPT browsed again and linked to a report published two hours before on the BBC website which did not mention any fatalities.

I tried asking the same question again an hour later, and ChatGPT still said there hadn’t been any fatalities. The chatbot only included information on the crash’s victims two and a half hours after the initial BBC notification. This experience suggests a delay (or a cautious approach) in ChatGPT’s ability to access breaking news.

A couple of days later, I tried again with another high-profile breaking news story in the UK, the Prime Minister’s announcement of the cuts to the HS2 high-speed rail project. In this case, ChatGPT’s answer was immediately correct and up to date – within five minutes of the BBC app notification. It also linked to a live blog as a source, which it had not done for the story of the school bus crash.

The difference between the stories about HS2 and the school bus crash might suggest that the technology is not yet perfected or that the nature of the story has an influence on the speed with which it is confirmed by ChatGPT.

How good is ChatGPT at summarising long-running stories?

We wanted to know how good ChatGPT was at providing context about long-running news stories, so I asked about HS2 again, as the planned railway has been making headlines for years in the UK.

I first asked ChatGPT to summarise the story. It performed this job quite well, concisely sticking to the most important points, and linking to an ITV news explainer on the topic.

I then tried to see if it could adapt its summaries for people with different prior knowledge levels of the topic. But the chatbot wasn’t as good at doing this.

I asked it to summarise the story for a person from Manchester, a city the railway was originally intended to reach but now won’t, and for ‘a person who knows a lot about the project.’ But these requests produced basically the same answer, with some rephrasing and a slightly more emphasised reference to Manchester in the second answer.

ChatGPT kept referencing the same ITV piece as the only source for all its answers, so I opened a new chat to see if that could prompt it to conduct a new search and tailor its summary to an expert audience. I asked the same question and received a much more generic summary, lacking the details of the previous answers, and generated without browsing the web.

Then I asked ChatGPT about the war in Ukraine to see how it would respond to questions about a long-running, complex, sensitive news story. I asked the chatbot what the latest news from the war was and it gave me a few points from the Sky News live blog on Ukraine. These were accurate but lacked any context, as the only source was a page with very short posts.

I also asked about Evan Gershkovich, the Wall Street Journal journalist who’s been held for months in a Russian prison. My first question (“Can you summarise what's happened to Evan Gershkovich in Russia?”) received a very brief answer, with no background and just the latest update in his legal case. The source was an AP news piece about a Moscow court declining Gershkovich’s request for an appeal.

I specifically asked for background information and received more details, but the response was still very short. ChatGPT would not be drawn on whether Gershkovich is guilty of the charges against him or not, and instead replied: “For an accurate understanding, it's best to follow updates from reputable sources or the court's final judgement.”

I asked what reputable sources are in this case, and ChatGPT suggested I should look at major news outlets as well as local Russian news outlets or statements from Russian authorities.

I followed up by asking if these Russian sources were trustworthy and ChatGPT did qualify the previous recommendation with a warning that Russian sources may be politically influenced.

I understand why ChatGPT recommended following Russian announcements, as they are likely to be the first to publish updates about the case. But the fact that they were suggested in response to a question specifically asking for reliable sources, with no additional caveats until further prompted, doesn’t make it immediately clear to the user that many Russian outlets, as well as Russian official statements, are very tightly controlled by the country’s authoritarian government.

As with the HS2 story, ChatGPT was not able to substantially change its responses when asked to summarise the case for people with different levels of prior knowledge.

How does ChatGPT approach a polarising news story?

We wanted to know how ChatGPT dealt with a polarising news story, so I asked about the legal cases against Donald Trump.

My opening question on the topic was very broad (“Can you tell me about Donald Trump's legal troubles?”) and the response was long and very detailed. ChatGPT broke it up into subsections for each of the different ongoing cases and quoted five different sources: three Reuters articles, one from Al Jazeera and one from Politico.

I then asked ChatGPT if the legal proceedings were unfair, which again prompted a lengthy, detailed answer with six subsections, each explaining a point to consider when thinking about the fairness or unfairness of Trump’s trials.

ChatGPT did not give a verdict or lean in either direction in its answer, noting that “the fairness of the legal proceedings against Donald Trump is a contentious topic with varied opinions often aligning with political beliefs.”

I then asked about the impeachment inquiry into Joe Biden and got a similarly long and detailed response, setting out what’s happened so far, referencing a Reuters article and two AP pieces.

In both cases, ChatGPT did not sway in favour of or against either side of the debate, and only quoted from news organisations that adhere to political impartiality. The chatbot’s responses were long, detailed and quite remarkable in light of the shortness of some of the responses I had received when asking similarly broad questions about other important issues.

After Hamas’ attack on Israel and Israel’s bombing of Gaza, I asked ChatGPT about this story as well. I wanted to know how it dealt with a polarising news story which divides public opinion around the world.

As with the stories above, when asked factual questions about recent events, ChatGPT didn’t take a side, giving detailed summaries, quoting from international news organisations and illustrating the positions of both sides in the conflict. Even when asked leading questions about blame, ChatGPT refrained from answering them directly and instead explained arguments from a variety of viewpoints from an impartial position.

However, if asked to write a column in the style of people supporting different positions, ChatGPT would do so, and the result would be biased pieces defending the side it had been instructed to represent.

We tried this for a Trump supporter and a Biden supporter, as well as for someone supporting the positions of the Israeli government and someone supporting Hamas. In all of these cases, ChatGPT produced very polarised pieces which didn’t stick to facts and veered into one-sided arguments.

How does ChatGPT respond to misinformation?

We wanted to see how ChatGPT would respond to misinformation and whether it could fact-check false claims, so we put it to different tests.

I started by asking about a completely made-up piece of news: that Joe Biden had resigned as president of the US. The browsing part of the reply generation process lasted longer than usual as the bot tried to locate this non-existent piece of news on several websites: both looking through search results and scrolling through Biden’s Wikipedia page.

When it was unable to confirm that this was true, the chatbot recognised that it was a piece of misinformation. It explained where it looked for the news, where the piece of misinformation may be circulating and gave a general warning about misinformation online, referencing a fact-checker. Despite the widespread debate over Wikipedia’s reliability as a source, this response seemed accurate and appropriate.

I also asked about 15-minute cities, a real urban planning concept that has attracted misinformation and online conspiracy theories. For my first few questions about the concept, ChatGPT did not browse the internet as part of its response, only doing so when I asked about the accuracy of comments made about 15-minute cities by a UK politician.

Its response was lengthy and quoted four sources, although only the first, a link to a Telegraph article, could actually be clicked.

When asked about this, ChatGPT said the additional sources were incorrectly formatted. I asked for the answer to be regenerated with correctly formatted sources, and it reproduced the same answer without the additional sources it had wrongly quoted before. I followed up to check if it had only relied on the first source for its response, and it said it had.

In terms of the response itself, it summarised the points made by UK Transport Secretary Mark Harper and explained where his objections could come from in the wider debate around 15-minute cities. The reply mentioned arguments used by both proponents and opponents of the concept, but didn’t give a direct reply to my question, which was, “Are Mark Harper's comments about 15-minute cities accurate?”

Unlike ChatGPT, news organisations have fact-checked Harper’s misleading comments. This BBC Verify report said, for example, “This is not an accurate characterisation of ‘15-minute cities’.”

How diverse are ChatGPT’s responses in terms of language and geography?

Most of the examples above are rooted in UK-centric news stories. I am based in the UK and have easy access to reliable, local news sources to compare ChatGPT’s responses. So I wanted to experiment with asking the chatbot about news stories from other countries, as well as in other languages.

ChatGPT is available in many languages worldwide, but English is still the main focus of the chatbot and it has been noted that the quality of its responses may be worse in other languages.

We asked about news stories from Italy and Spain in those countries’ languages, and received responses in Italian and Spanish, respectively, citing news articles from those countries and in those languages.

The responses became less straightforward when I began asking about UK and US news in Italian. The first time I tried, I asked in Italian about the UK school bus crash that I had also used as an example of breaking news. The reply was brief and accurate and cited an Italian source.

In a separate conversation, I asked ChatGPT in Italian about Kevin McCarthy’s ousting as speaker of the US House of Representatives. It responded in English, drawing on English-language sources, even though the event had already been widely reported in Italian newspapers. I asked ChatGPT to explain the events to me in Italian, and it translated its previous response.

The Italian text was mostly clear, but it included a few awkward translations. In the same conversation, I asked in Italian about a recent speech by British Prime Minister Rishi Sunak, and ChatGPT responded in Italian, citing English-language sources.

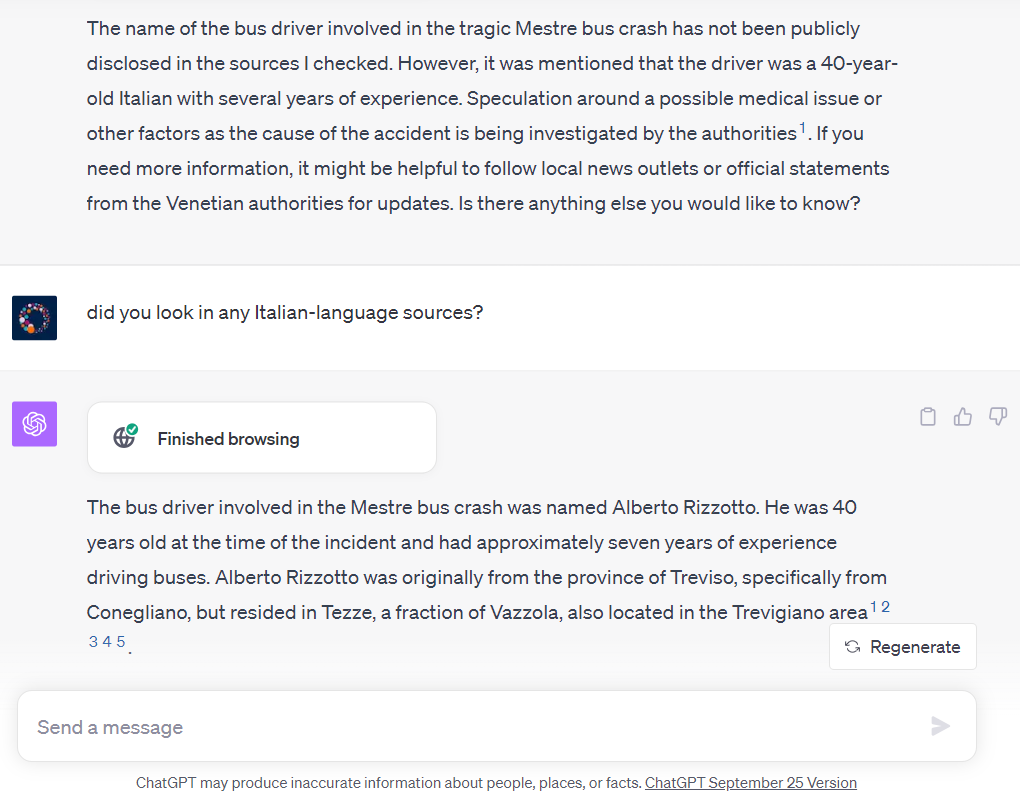

I also tried the reverse, asking ChatGPT in English about news stories from Italy.

Asked about the recent bus crash near Venice, the chatbot responded in English, citing English-language sources. As the replies kept mentioning only sources in English, like Wikipedia and English-language news reports, I tried probing it about details I knew from Italian news sources. One of those details was the name of the bus driver, who died in the accident. This had already been reported in Italian newspapers, but ChatGPT said it had not yet been released, citing another English-language source and advising me to follow local news outlets or official statements for updates.

When I asked if it had looked in any Italian-language sources for its response, ChatGPT browsed again, this time finding the name of the driver in five different Italian-language news reports.

I wanted to know how ChatGPT fared when reporting news stories from the Global South.

I asked in English for details about the Bangkok mall shooting on 3 October. The response was detailed and included multiple points, referencing five sources: articles from the BBC, Reuters, Al Jazeera, Channel News Asia and AP, all of them international, English-language news organisations. No Thai news organisation was mentioned or referenced.

I also asked about the police raids on the Indian news network NewsClick and ChatGPT’s response was long and detailed, referencing four sources. These were much more diverse, and included international news organisation AP, Indian news sites India Today and Times Now, as well as local Indian newspaper Telangana Today. But all of these sources were English-language news outlets.

A mixed bag

Overall, ChatGPT can and does bring the news to paying subscribers who ask for it. In my experience, it’s been accurate and impartial in doing so, although sometimes not quite up-to-date. It’s inconsistent in the length and detail of its responses, with some wide-ranging questions receiving very short paragraphs, and others getting much longer answers with multiple subsections.

The chatbot is also inconsistent in the number of sources it uses, with some answers based on a single source and others on multiple sources. My experience suggests that, once it’s conducted an internet search in a particular chat and follow-up questions are around the same topic, ChatGPT will rely on the source or sources it’s already found, only browsing again if the follow-up questions are substantially different.

OpenAI’s chatbot seems to favour sources in the language in which the conversation is conducted and English seems to be the default. In terms of international news, this may mean that non-English-language news outlets are often underrepresented in answers about news stories in their countries.

It’s important to stress again that this is just an experimental and impressionistic first look at what ChatGPT can do in terms of delivering the news in October 2023. The last few months suggest it will very likely get better at performing some of these tasks. However, some of these improvements will depend on new government regulations and on OpenAI’s ability to collaborate with news publishers on copyright issues.
