Here are the papers at the Future of Journalism Conference 2025 presented by Reuters Institute researchers

These are the abstracts of the papers that Reuters Institute researchers will present at the Future of Journalism Conference. The annual gathering is in its 10th year and is hosted by Cardiff University's School of Journalism, Media and Culture (JOMEC). Much of the research we present focuses on the impact and use of AI among news audiences and in wider society, including its political economy, its role in misinformation and elections, and how it can be used for climate news. Read the summaries below.

Who Gets What and Why? Untangling the Emerging Political Economy of AI, News, and Technology Companies

This paper examines how artificial intelligence is fundamentally reshaping power dynamics in the public sphere, focusing on the complex and often competing interests between news organisations, technology companies, and the state. As generative AI technologies are pushed by technology companies, adopted by news organisations, and encouraged and supported by nation states, traditional power structures in the media landscape are being reconfigured, raising questions about the future make-up of the public sphere.

Drawing on primary research, including interviews with news organisation executives, AI company personnel, and regulatory authorities, an analysis of industry and mainstream press coverage, and observations from workshops and events during 2023-2025, this study investigates how different stakeholders are vying for position in this evolving ecosystem.

The paper (1) analyses how news organisations are negotiating their diminishing gatekeeping role while technology companies gain greater influence over information distribution through AI systems, which increasingly compete with the products developed by news organisations. It then (2) examines specific cases of power contestation, including disputes over content licensing, data ownership, and content distribution. The paper concludes by proposing a research agenda focused on examining the implications of this development for democratic discourse, market competition, and public access to information.

11 September, 11:00, Room 1

Examining the source effect: Comparative analysis of exposure to climate protest news and public support across the UK, USA, Germany, and France

Waqas Ejaz and Richard Fletcher

The past decade has seen a surge in climate protests, driven by increasing public frustration over inaction in addressing climate change. Groups such as Just Stop Oil in the UK, Letzte Generation in Germany, and Les Soulèvements de la Terre in France have engaged in disruptive direct-action protests, including blocking roads, disrupting major events, and defacing artworks, with the goal of capturing the attention of the news media, policymakers, and the public at large regarding the issue of climate change inaction. However, while these tactics certainly generate media attention, their effectiveness in mobilising public support remains contested, with an ongoing debate over whether they foster engagement or alienate the public (Young & Thomas-Walters, 2024).

Journalism plays a central role in shaping perceptions of protests, often relying on established conventions that frame activism as disorderly or extreme—a process known as the protest paradigm (McLeod & Hertog, 1998). In contrast, media mobilisation theory suggests that mass media exposure enhances political knowledge and engagement (Norris, 2000), potentially increasing awareness and influencing concern over climate change and support for pro-environmental policies.

While research has examined news framing of climate activism, comparative evidence on how public responses to protest news might vary across countries and political contexts remains scarce. To address this gap, we conducted a pre-registered, multi-country survey experiment (N = 4,167) across France, Germany, the UK, and the USA, where participants were randomly assigned to one of three conditions: exposure to media coverage of climate protests, activist messaging on social media, or a control condition.

The study also examines whether political orientation, trust in media, and trust in activists influence these effects. Findings reveal that exposure to news media coverage significantly decreases support for climate protests, providing evidence for the protest paradigm. However, contrary to expectations from mobilisation theory, exposure to activist messaging did not increase support for climate protests, suggesting that even the direct communication of activist narratives may not be sufficient to counteract negative media framings.

Results also show that the effects of exposure are largely stable across ideological groups and national contexts, challenging assumptions that political orientation strongly conditions public responses. Furthermore, trust in media or activists does not mediate these effects, suggesting that other mechanisms – such as emotional reactions or perceived legitimacy – may be more influential. These findings raise critical questions about the challenges climate movements face in mobilising public support through mass media. While news coverage amplifies awareness of protests, the framing of activism in mainstream reporting may work against their broader goal of pro-climate action. The study contributes to research on media effects, political communication, and climate activism by highlighting the strategic dilemmas protest movements encounter when seeking media visibility.

11 September, 13:30, Room 10

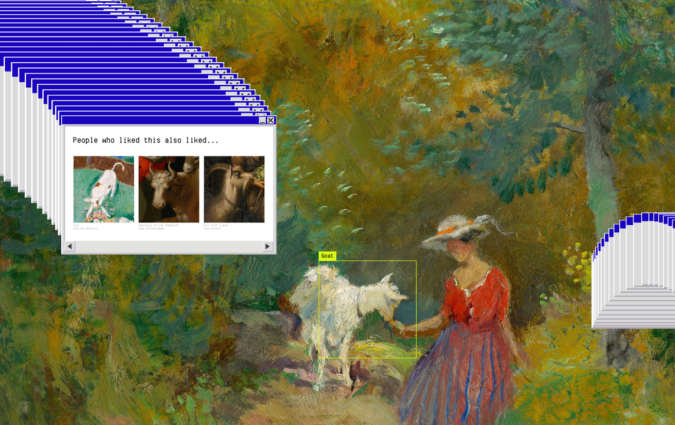

How does GenAI ‘see’ climate change (and back again): Exploring the challenges and opportunities of GenAI for climate visual journalism

Saffron O'Neill, Veronica White, Tristan Cann, Felix Simon, Alastair Johnstone-Hack, Simon Puttock, Sylvia Hayes, Oliver Blewett, Chico Camargo, Travis Coan, Francisco Gonzalez Espinosa, Ranadheer Malla

Visualising climate change is a challenge for journalists. Climate images can be clichéd, disconnected and even divergent from information communicated by other modalities (such as text), and can represent a missed opportunity to engage audiences. Generative AI could potentially provide transformative opportunities to visually illustrate climate news. However, risks may include reinforcing stereotyped, inaccurate or unethical visual content, with knock-on effects on audience trust and public informedness. Working as a transdisciplinary team of social scientists, computer scientists and journalists, we co-produced three experiments designed to represent a spectrum of potential future engagement with generative AI technology. We prompted five generative AI tools (ChatGPT, Stable Diffusion, Midjourney, Microsoft Copilot, Generative AI by Getty Images) with seven typical climate news topics (climate change, flooding, heatwave, migration, Net Zero, COP26, nature) via three prompt styles (simple, detailed, story). The results indicate that there are substantial barriers for generative AI tools to create meaningful images for climate-related stories. We reflect on the implications of these experiments for journalists and others concerned with climate visual communication.
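To give a sense of the scale of this design, the short sketch below enumerates every tool, topic and prompt-style combination named in the abstract. It is illustrative only and is not the authors' code: it assumes the three factors were fully crossed, which the abstract does not state explicitly, and the function name `build_design` is hypothetical. How many images were generated per combination is not given in the abstract and is not modelled here.

```python
from itertools import product

# Factor levels as listed in the abstract.
TOOLS = [
    "ChatGPT",
    "Stable Diffusion",
    "Midjourney",
    "Microsoft Copilot",
    "Generative AI by Getty Images",
]
TOPICS = [
    "climate change",
    "flooding",
    "heatwave",
    "migration",
    "Net Zero",
    "COP26",
    "nature",
]
STYLES = ["simple", "detailed", "story"]


def build_design(tools, topics, styles):
    """Return one record per tool/topic/style combination.

    Assumption: a fully crossed design (every tool sees every topic
    in every prompt style). This is a hypothetical helper for illustration.
    """
    return [
        {"tool": tool, "topic": topic, "style": style}
        for tool, topic, style in product(tools, topics, styles)
    ]


design = build_design(TOOLS, TOPICS, STYLES)
print(len(design))  # 5 tools x 7 topics x 3 styles = 105 combinations
```

Under that assumption, the design covers 105 distinct prompts, which helps explain why the team describes the work as a spectrum of experiments and not a single test.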

11 September, 15:30, Room 5

Don’t Panic (Yet): Assessing the Evidence and Discourse around (Generative) AI and Elections

Felix Simon, Sacha Altay

This paper critically examines the impact of generative artificial intelligence (AI) on elections, focusing on the 2023–2024 global election cycle. Despite concerns about AI enabling more efficient and effective voter targeting and persuasion, as well as more effective misinformation and disinformation, we argue that the influence of AI on election outcomes is – for now – overestimated. We identify several factors behind this overestimation: the inherent challenges of mass persuasion, the difficulty of reaching target audiences in oversaturated media environments, and the limited effectiveness of AI-driven microtargeting in political campaigns. Additionally, the socio-economic, cultural, and personal factors that shape voting behaviour outweigh the influence of AI-generated content. We argue that the media played a central role in the skewed discourse on AI’s role in elections, framing it as part of the ongoing ‘cycle of technology panics’. While acknowledging AI’s risks, such as amplifying social inequalities, we argue that focusing on AI may distract from more structural threats to elections and democracy, including voter disenfranchisement and attacks on election integrity. Our paper calls for a recalibration of the narratives around AI and elections and more careful media reporting, proposing a nuanced approach that considers AI within broader socio-political contexts.

12 September, 13:30, Room 9

Between Concern and Epistemic Vigilance: How Audiences React to the Rise of AI-generated misinformation in three countries

Felix Simon, Amy Ross Arguedas, Richard Fletcher, Rasmus Kleis Nielsen

Media and political discourse has been full of dramatic warnings about how generative AI could drive misinformation, even as some scholars have cautioned against exaggerating the risk given what we know about how misinformation tends to function (Simon et al., 2023; Simon et al., 2024). In this paper, we examine public perceptions of generative AI’s role in the creation and dissemination of misinformation, drawing on qualitative data from 90 semi-structured interviews and 45 interaction protocols conducted across Mexico, the United States, and the United Kingdom. We focus (1) on people’s perceptions of the changes brought about by generative AI with respect to misinformation and (2) on people’s strategies for dealing with them.

Even as our data suggest many have been receptive to elite cues stressing the potential risks of AI misinformation and are concerned, we also find that most respondents have developed strategies for how they personally want to mitigate it. Three core themes emerged with respect to the former. First, participants expressed concerns regarding the erosion of trust and credibility in news media, as AI-generated content potentially challenges audiences’ ability to discern authentic reporting from fabrication. Second, participants worried about the increasing proliferation of misinformation, noting the ease with which realistic fake images, videos, and texts can be created. Third, respondents highlighted their worry that certain audiences, such as older adults or less technologically savvy individuals (generally other people, not the respondents themselves), could be especially susceptible to AI-generated misinformation.

In terms of how respondents say they will mitigate these perceived risks, our data document two main strategies: first, adapting their epistemic vigilance strategies and, second, calling for greater transparency and oversight. For instance, many participants described actively scrutinising images and videos (checking details, seeking multiple sources, or even reading through comments) to detect signs of manipulation. They stated that they rely on trusted media brands as a safeguard, while expressing concern that individuals less versed in digital literacy (such as older adults) may be more vulnerable. However, in addition to stating that they have become more cautious and methodical in their information consumption, participants see a need for clearer disclosures and regulatory oversight to help everyone navigate the increasingly blurred line between genuine and fabricated content.

12 September, 15:30, Room 1
