This AI expert is creating a chatbot to keep you safe

Nikita Roy during a panel discussion at the International Journalism Festival in Perugia. | Credit: Francesco Cuoccio | IJF

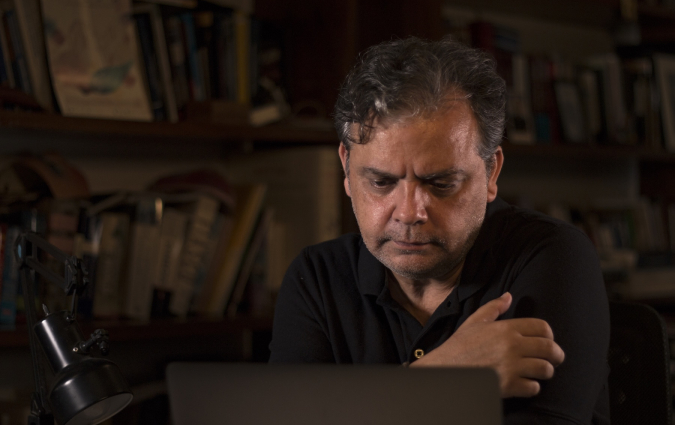

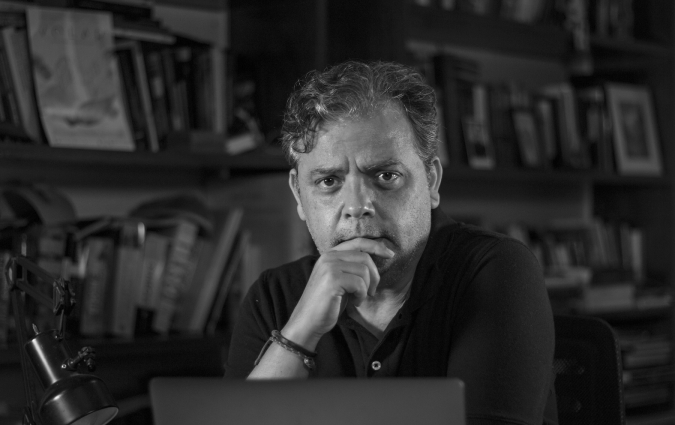

If you’re interested in AI and the future of news, you will have heard of Nikita Roy. This data scientist, journalist and AI expert has been explaining how news organisations are using AI in her popular podcast Newsroom Robots since 2023. Roy is now spending her Knight Fellowship at the International Center for Journalists developing courses to teach AI literacy to other journalists.

During a panel at the International Journalism Festival in Perugia, moderated by Joel Simon, Roy and her co-panellists Elisabet Cantenys, Mike Christie and Gina Chua announced a new collaborative project: JESS (Journalist Expert Safety Support).

JESS will be a chatbot to answer journalists’ questions about safety when working in risky environments. Roy’s Newsroom Robots Lab will work on this initiative with the Craig Newmark Graduate School of Journalism at CUNY and the ACOS Alliance, a global group of newsrooms and media NGOs working together on journalism safety.

I caught up with Roy on the sidelines of the Nordic AI in Media Summit to find out more about the idea behind JESS, how it’s going to work, and how AI can help keep journalists safe.

Q. What is JESS, and how does it work?

A. JESS is an AI safety guide, an assistant to help contextualise safety information for your specific use case. So imagine you are about to go and report on a hurricane in Florida, and you need to quickly make sure, “What are all of the different things that I need to be careful about?” or, “I'm going to go cover a protest. It's my first time going to do that. How do I keep myself safe?”

I can go and query this chatbot. It's trained on all of the safety guides. We are developing it with Mike Christie, who led safety at Reuters. One of the biggest powers of generative AI is the ability to access knowledge and then try to understand contextually how it correlates to you. And that's exactly what we're trying to do.

We're starting just with a chatbot. It will cover multiple different things, all the way from natural disasters to online safety. For example, “I am going to be writing this exposé on a high-profile individual. How do I keep my sources safe? How do I keep myself safe? What are the possible repercussions that could happen?” You’ll be able to get advice in a trusted environment, and that information will be based on documented and trusted safety guidance.

Q. Where did the idea for JESS come from?

A. JESS is the brainchild of Gina Chua, executive editor at Semafor. She built out the safety team at Reuters when she was there, and she created a prototype where she took documents and created a mini chatbot to see if people could ask questions and query safety information.

CUNY built on this project, funded by the Patrick McGovern Foundation, and that's how the entire idea came about – from [Chua and Christie’s] experience leading safety at Reuters and training journalists. As Gina said [during the project’s presentation in Perugia], you do a training once, and then forget about it. When you are in that split-second moment and you have to go and cover a hurricane, you have to try and remember what it was exactly that you were taught in that training. That is one of the biggest pain points we're trying to solve.

We announced the project in Perugia, we are in the development stages, and hopefully we will go live this fall.

Q. For the tool to work, it will need to be trusted by journalists. For that to happen, it will need to be accurate. How will you ensure accuracy?

A. A lot of what impacts trust has to do with design choices. So the key ways in which we are doing that are twofold.

The first one is the interface design, where people can go and quickly reference the sources. So that's not just the chatbot. You could go and read exactly what the safety guidance says, so you can double-check that information yourself.

The second one is that we are heavily trying to reduce the hallucination rates. We are going to be training this. It is not like the entire ChatGPT model that has been trained on everything. We are taking a model and then we are training it only on the specific documents that we have. That tends to reduce the hallucination, because it's now grounded in those data sources. Another technical step that we're taking is having the work of one large language model check the work of another large language model. That is another way in which you can reduce hallucinations.
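As a rough illustration of the design choices Roy describes (answers tied to a source the reader can open, a second model checking the first, and an explicit "no information" reply), here is a minimal Python sketch. It is not JESS's code. The guide passages are invented placeholders, the keyword-overlap retrieval stands in for whatever retrieval the team actually uses, and `draft_model` and `checker_model` are hypothetical callables standing in for two language models.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical guide title shown to the user
    text: str    # placeholder text, not real safety guidance


# Invented placeholder passages, for illustration only.
GUIDES = [
    Passage("Hypothetical storm guide",
            "Before covering a hurricane, check evacuation routes and carry water."),
    Passage("Hypothetical protest guide",
            "At a protest, identify exits and agree a check-in schedule with your editor."),
]

STOPWORDS = {"a", "an", "and", "at", "before", "do", "how", "i", "in",
             "is", "my", "the", "to", "what", "with", "your"}


def tokens(text):
    """Lower-case word set with common filler words removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def retrieve(question, guides=GUIDES, min_overlap=2):
    """Return the best-matching passage, or None if nothing matches well enough."""
    q = tokens(question)
    best = max(guides, key=lambda p: len(q & tokens(p.text)))
    if len(q & tokens(best.text)) < min_overlap:
        return None
    return best


def answer_with_check(question, draft_model, checker_model, guides=GUIDES):
    """Draft an answer from one passage, then let a second model veto it."""
    passage = retrieve(question, guides)
    if passage is None:
        # Say so plainly instead of guessing.
        return {"answer": None, "source": None,
                "note": "No guidance found in the loaded documents."}
    draft = draft_model(question, passage.text)
    if not checker_model(draft, passage.text):
        # If the checker rejects the draft, fall back to quoting the source.
        draft = passage.text
    return {"answer": draft, "source": passage.source, "note": None}
```

Returning the source title alongside every answer is what would let a reader open the underlying guidance and check it for themselves.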

Q. How will you bridge the trust gap that journalists may have when introduced to this tool?

A. I know firsthand all of the concerns that journalists have regarding AI. For example, they are concerned about the privacy of their data. So we are making the decision of not using any of the proprietary models. We are not using OpenAI or Google. We are using open source models and, on top of that, we’ll host the project on CUNY servers.

Q. And how will you get people to adopt it?

A. I would frame it like this. It’s not always a tool. Sometimes, the question is not, “What can AI do for you?” Sometimes the question is, “What is the job that needs to be done?” When journalists report in risky environments or when editors send their reporters to cover something and want them to be well equipped, that's the job this tool is going to be able to do for them, and that's how we are designing it.

Q. Where does the information provided by JESS come from?

A. We're developing it in-house. It's being developed by Mike Christie, who led and built out the entire safety team at Reuters, in combination with other NGOs and newsrooms’ policy guidelines.

Q. Would JESS be able to provide information on journalism safety worldwide, or are you starting with a more limited scope?

A. We’re starting with the United States and then having an international rollout, planned out for later next year. There is a lot of information, and that's why we are starting with the US. We’ll be testing it with that group, and then rolling it out.

Q. How are you testing the system?

A. We’re still working on the details. That will be happening over the summer. As of right now, we will have newsrooms as part of the testing process, and then see if this tool meets their needs, if there are any other issues we can help with, and if anything has gone wrong.

Q. Once it’s ready, do you see it replacing or complementing the journalist safety trainings we’ve been using until now?

A. This is not a replacement for safety training. Elisabet [Cantenys] from ACOS Alliance has stressed this is an enhancement to your safety training. Once you have your safety training, this is a place where you can go and reference information, and the way we're designing it, it's not going to be publicly accessible to everyone. You'll have to be approved to get access to it.

Q. What are JESS’s limitations? How do you make sure, for example, that the system doesn’t have a bias toward responding even when it doesn’t hold information, as some chatbots tend to do?

A. Those are design choices. If JESS doesn't have that information, it will clearly state that to the user and not make up stuff.

Q. How will newsrooms or individuals be able to access it? Will they be required to pay a fee?

A. This is completely funded by the Patrick McGovern Foundation. As of right now, it's going to be free. For now, the entire focus is on building the product. Let's get this launched and get people using it.

Q. Are there any other ways in which AI can contribute to journalists’ safety?

A. A lot of different ways. It's not just a chatbot. Think about risk assessments, scenarios and planning that you should be doing to help prepare your journalists.

Digital safety is one of the biggest ways in which you can protect your journalists. You can start keeping track of who is facing threats, what the different scenarios are if you're going to produce an article, and what things can go wrong. Those kinds of capacities for AI, especially to think broadly, once you are able to train it on your own vetted set of documents, are going to be highly useful.

Another process I think we don't think about a lot is just the amount of document processing.

Recently I spoke to someone who works with safety at a newsroom, and they were talking about all of the incident reports that they have to file after any safety incident. They were asking me, “How do we make sense of all of that data?” Because those incident reports come, and then they see them. But how can they start making real-time sense of all of those incident reports and see if there's a targeted pattern of things happening across a particular group of journalists, and then start being very proactive as an organisation, instead of reactive?
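To make the incident-report idea concrete, here is a small Python sketch of one way a safety team might spot a cluster of similar incidents. It does not use AI and is not something described in the interview. The report fields (`team`, `kind`), the 30-day window and the threshold of three are all assumptions chosen for illustration.

```python
from collections import Counter
from datetime import date, timedelta


def flag_patterns(reports, today, window_days=30, threshold=3):
    """Return (team, kind) pairs with at least `threshold` reports in the window."""
    cutoff = today - timedelta(days=window_days)
    recent = [r for r in reports if cutoff <= r["date"] <= today]
    counts = Counter((r["team"], r["kind"]) for r in recent)
    return sorted(pair for pair, n in counts.items() if n >= threshold)


# Invented example records, for illustration only.
REPORTS = [
    {"date": date(2024, 5, 2), "team": "politics", "kind": "online harassment"},
    {"date": date(2024, 5, 9), "team": "politics", "kind": "online harassment"},
    {"date": date(2024, 5, 20), "team": "politics", "kind": "online harassment"},
    {"date": date(2024, 5, 21), "team": "sports", "kind": "equipment theft"},
    {"date": date(2024, 1, 5), "team": "sports", "kind": "equipment theft"},
]

flagged = flag_patterns(REPORTS, today=date(2024, 5, 31))
```

With these sample records, only the politics team's three harassment reports fall inside the window and reach the threshold, so that pair is the one flagged for follow-up.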
