Our podcast: Digital News Report 2023. Episode 4: Attitudes towards algorithms and their impact on news

In this episode of our Digital News Report 2023 podcast series we explore people’s attitudes towards algorithmic selection of news and the correlation with attitudes towards editorial selection. We explore how people’s self-reported news behaviours and trust in news influence attitudes towards how news is selected, and we look at concerns about missing out on news due to algorithmic selection.

Speakers:

Richard Fletcher is Director of Research at the Reuters Institute. He is primarily interested in global trends in digital news consumption, comparative media research, the use of social media by journalists and news organisations, and more broadly, the relationship between technology and journalism. He is the author of a Digital News Report chapter on attitudes towards algorithmic selection.

Our host Federica Cherubini is Director of Leadership Development at the Reuters Institute. She is an expert in newsroom operations and organisational change, with more than ten years of experience spanning major publishers, research institutes and editorial networks around the world.


The transcript

Algorithms' role in news | Attitudes towards algorithmic selection worldwide | Changes over time | Correlations with interest, trust and news use | Concern over personalisation | Lessons for platforms and publishers

Algorithms' role in news

Federica: So, just so we start on the same page, can you first explain what an algorithm is, and how and where algorithms are used in news distribution?

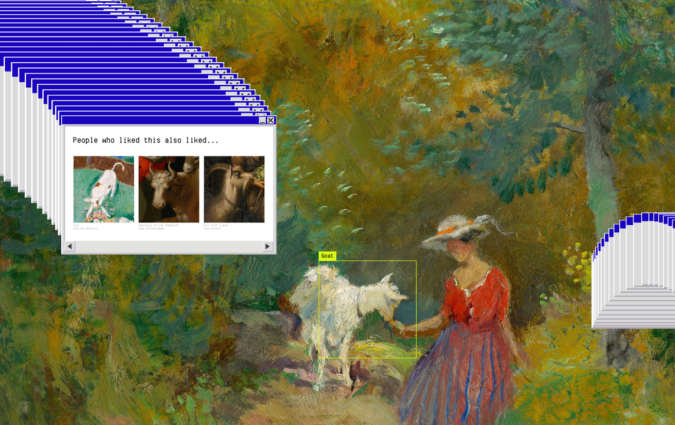

Richard: Well, more broadly, algorithms are used in lots of different ways in lots of different fields. Essentially, an algorithm is a set of instructions to complete a specific task, normally expressed in the form of code and normally informed by data in some way. In the context of news, one of the most important ways algorithms are used is to make selection decisions about what to show users, for example in social media feeds, via aggregators and search engines, and so on. And I think they're important because it wasn't that long ago that almost all selection decisions were made by journalists, by human beings. But in recent years, more and more news selection has been done algorithmically, in part because more and more people are choosing to get news via social media, search engines, etc.

Federica: If we look at today's news environment, and more broadly at the data we have from the Digital News Report about how people consume news, why is this question so important at this point?

Richard: I think it's important because, as I said, these ways of getting news, via social media, search engines, etc., are becoming more widely used. And over time, questions have grown about whether this is a good way to select news, whether this is a good way for people to get news, and whether it creates echo chambers, filter bubbles and so on. I think a big part of the problem is that people are not really aware of the data, and not really aware of the bubbles. But interestingly, what the public think about these ways of selecting news has been somewhat neglected. So we wanted to ask some questions to find out what people think of these ways of getting news, and also how they think about them in relation to selection by editors and journalists.

Attitudes towards algorithmic selection worldwide

Federica: So if we start with looking at some of the headline figures, overall, what are audiences' attitudes towards these various ways the news is selected and presented to them?

Richard: So in the Digital News Report survey, we asked a set of three questions across a range of different countries, asking people whether they think each of three different methods is a good way of getting news. The first was, ‘Do you think news selected by editors and journalists is a good way of getting news?’ The second, ‘Do you think that news selected automatically, based on your own past behaviour, is a good way to get news?’ And the third, ‘Do you think that automatic selection based on what your friends have consumed is a good way to get news?’ What we found is that for all three of these methods, even though they're very different, the level of approval was quite low. 27% said that they thought news selected by editors and journalists was a good way to get news. The equivalent figure for automatic selection based on past behaviour was 30%, and for selection based on friends' behaviour, 19%. So none of these methods has particularly high levels of approval. But what's interesting is that automatic selection based on past behaviour actually comes out slightly ahead of selection by editors and journalists, which may come as a surprise to some people, but that's what we found.

Federica: So it's not that those who dislike selection of news through algorithms instead prefer the judgement of editors?

Richard: No. Interestingly, what we found is that in addition to there being low levels of approval for each of these three methods, they're actually correlated with one another. What that means is that if someone says they think selection by editors is a good way to get news, it's likely that they'll think automatic selection is a good way to get news as well, and vice versa. So low approval for one normally means low approval for the other. Again, I think this may be surprising, because we know that human selection and algorithmic selection are very, very different. They function in different ways. So we might expect people to have diverging views, but that's not what we find. The fact that approval for all three selection methods is quite low, together with the fact that they're correlated, is what led us to call this generalised scepticism. The idea is, firstly, that people are quite sceptical, and secondly, that it's generalised, in the sense that if people are sceptical of one method of selection, they tend to be sceptical of the others too.

Federica: Of course. You mentioned you've looked at this across a range of different countries. Did any differences between countries come up?

Richard: I mean, there are country differences. If we look across all the different selection methods we asked about, depending on the country, the figures range from around 10% approval all the way up to 40%. It's interesting to note that approval never exceeds 50%, so this is always minority approval, no matter which country and which selection method we look at. Approval in the UK is among the lowest for all three selection methods. But beyond that, it's difficult to see any clear patterns in the data. It's not obvious that there's something out there that explains the differences we see across countries. They're quite consistent, but it's difficult to make sense of the pattern.

Changes over time

Federica: You asked the same questions about attitudes towards algorithms in the 2016 Digital News Report. What has changed since then?

Richard: That's right, we fielded the same set of questions in the same set of countries in 2016, which allows us to compare this year's results with those from several years ago. And what we found is that there wasn't much change at all in the numbers. Despite everything that's happened in the intervening time, not just politically, but also in terms of new platforms and new ways of selecting news and using algorithms emerging, attitudes are broadly similar. We did see a slight decline in the proportion of people who said that each selection method was a good way to get news. At the same time, we saw a slight increase in the neutral middle category, those who neither approve nor disapprove, for each of the different methods of selection. I think this indicates that people are perhaps a little more ambivalent now than they were several years ago. And I think this makes sense if we consider that there is a wider range of platforms now, so it's maybe difficult to have a clear view on whether algorithmic selection is good or bad, just because it's deployed in lots of different ways. It's more complicated.

Correlations with interest, trust and news use

Federica: You also looked at how these attitudes towards news selection correlate with other attitudes towards news, including interest in news and levels of trust. What did you find?

Richard: This again links back to the point about generalised scepticism. One hypothesis might be that if you just focus on people who are very interested in news, you might start to see a diverging pattern, whereby they are strongly in favour of editorial selection but strongly against algorithmic selection. But again, that isn't what we find. Among those with higher levels of interest, approval for all three selection methods tends to rise, and it rises at about the same rate for each method. And the same goes for trust: the more trust people have in news in general, the more likely they are to say they approve of all three of these methods of news selection.

Federica: We also know a lot from the Digital News Report about the ways people say they get news. For example, whether they prefer to use platforms like social media and aggregators that use algorithms, or whether they prefer to go directly to publisher-controlled platforms like websites or apps, which of course use editorial judgement to decide what news appears and where, although in some cases not exclusively. What correlation did you find between these reported behaviours and the attitudes towards news selection we're talking about here?

Richard: Yeah, so if we look at the group who said they use platforms to get news, which, as I said, typically use algorithmic selection in some form, we might expect to see much higher levels of approval for algorithmic selection. We do find slightly higher figures, around seven or eight percentage points higher, but not huge differences. And the general pattern we've just been talking about stays the same. It's not the case that among people who use platforms a majority say they think automatic selection based on their past behaviour, for example, is a good way to get news. Approval is slightly higher, but the basic pattern I've just described is still there.

Concern over personalisation

Federica: A feature of news-via-algorithm is that each user sees something that is, to some extent, unique to them. This could raise questions about whether people are worried about missing out on news that others are seeing. You also mentioned that there's been talk in the past of things like filter bubbles. Can you help us understand this landscape a bit? What do people say about this worry?

Richard: Well, we asked a set of two questions to try and get to the bottom of this. Firstly, we asked people whether they're worried about missing out on important information if news is selected automatically. And secondly, whether they're worried about missing out on challenging viewpoints. The numbers for these two questions are roughly the same: just under half say that they are worried about missing out in this way. I think it's interesting because you mentioned filter bubbles, and we've done a lot of research on filter bubbles, trying to work out whether people really are missing out. What we've typically done is look at whether people who rely on algorithmic selection have more diverse news diets or not. And by diversity, we mean the range of different outlets or news brands that people come into contact with. What we typically find is that people who use platforms have more diverse news repertoires than those who don't. Again, this is interesting, because it's slightly different to what we asked about here. We didn't measure exposure to different viewpoints or different topics. But at least when it comes to viewpoints, we might expect the results to be similar, because we know that different outlets often have specific political slants. On topics, it may play out slightly differently. So in this sense, public concern is perhaps out of step with what we know from the empirical data.

Lessons for platforms and publishers

Federica: What do you think tech companies should consider when looking at these attitudes towards the algorithms that they use?

Richard: I think it's important to keep in mind that although slightly more people say that automatic selection based on past behaviour is a good way to get news than say the same about selection by editors and journalists, approval for all of these is quite low. And I think the increased use of platforms for news that we see in many countries across the world probably isn't an endorsement of their approach to news selection. We know that people typically use platforms for other reasons that have nothing to do with news, and they come across news while they're there. I think the growth in the use of platforms for news is also partly about the decline in more traditional ways of getting news. So I don't think they should take a huge amount of encouragement from the data.

Federica: What about news publishers? Positive attitudes towards editorial curation of news seem to be no higher than attitudes towards curation by algorithms. What is the lesson for publishers?

Richard: I think it's important for publishers to keep public opinion on this in mind. They may have hoped for big differences in attitudes towards algorithmic selection and editorial selection, but that isn't what we see. And I think what it means for publishers is that straightforward appeals to selection expertise, if they're trying to increase trust, for example, might not work with large parts of the public, because there are lingering concerns about bias, and people just don't hold the view that human selection is good and automatic selection is bad. In the end, I think editorial expertise is important and it is valuable. So the task perhaps is explaining to people why that is, what value human selection adds to the process and why it's important, instead of just telling people that it must be important.

Federica: Thank you so much Richard for joining us today.
