Generative AI and elections: why you should worry more about humans than AI systems

Supporters of India's Prime Minister Narendra Modi wear masks of his face, as they attend an election campaign rally in Meerut, India, in March 2024. REUTERS/Anushree Fadnavis

Prominent voices worry that generative artificial intelligence (GenAI) will negatively impact elections worldwide and trigger a misinformation apocalypse. A recurrent fear is that GenAI will make it easier to influence voters and facilitate the creation and dissemination of potent mis- and disinformation.

Despite the incredible capabilities of GenAI systems, we think their influence on election outcomes has been overestimated – and that there are good reasons that these systems will not upend democracy anytime soon.

In a recent paper we wrote for the Knight First Amendment Institute at Columbia University, we reviewed current evidence on the impact of GenAI in the 2024 elections and identified several reasons why the impact of GenAI on elections has been overblown. These include the inherent challenges of mass persuasion, the complexity of media effects and people’s interaction with technology, the difficulty of reaching target audiences, and the limited effectiveness of AI-driven microtargeting in political campaigns.

This article is an excerpt of that paper and addresses three key claims around GenAI and elections, based on recent evidence and the wealth of preexisting literature on technological change. We discuss these claims in turn, arguing that current concerns about the effects of GenAI on elections are overblown in light of the available evidence and theoretical considerations. You’ll find three more claims as well as sources in the full paper, which you can read here and download here.

1. AI will increase the quantity of information and misinformation around elections

The increase in the quantity of information, and especially misinformation, caused by GenAI uses could have various consequences, from polluting the information environment and crowding out quality information to swaying voters. Below, we dissect this argument.

The first premise is that GenAI will enhance the production of misinformation more than that of reliable information. If GenAI primarily supports the creation of trustworthy content, its overall impact on the information ecosystem would be positive. However, it is difficult to quantify AI’s role in content creation. The ways in which AI is used to support the production of reliable information may be more subtle than AI uses to produce false information, and thus more difficult to detect. For the sake of argument, we assume that AI will be used exclusively to produce misinformation.

The second premise is that AI-generated content not only exists but also reaches people and captures their attention. This is the main bottleneck: for AI misinformation to have an effect, it must be seen. On its own, misinformation has no causal power. Yet attention is a scarce resource, and the amount of information people can meaningfully engage with is finite, because time and attention are finite. So any piece of politically relevant information has to compete with other types of information, such as entertainment.

During elections, voters are already overwhelmed with messages and ads, making any additional content – AI-generated or not – another drop in the ocean. In low-information environments or data voids, where fewer messages circulate, AI-generated content could have a stronger impact. But even in such cases, it is unclear why GenAI content would outperform authentic content and other non-AI-generated content. If anything, the proliferation of AI content may increase the value and demand for authentic content.

The third premise is that, after reaching its audience, AI content will be persuasive in some way. While strong direct effects on voter preferences are unlikely, weak and indirect effects are conceivable. Elections are not only about votes but also about the quality of political debate and media coverage. Without swaying voters, AI could flood the information environment in ways that degrade public discourse and democratic processes.

We do not find the flooding argument particularly convincing either. Since most people rely on a small number of trusted sources for news and politics, misleading AI content from less credible sources would likely have limited influence, if it gets seen at all. If mainstream media and trusted news influencers do not misuse AI, why would AI-generated content from untrusted actors cause confusion?

Flooding is concerning when there is significant uncertainty about who to trust and when individuals lack control over their information exposure. Yet, in practice, international survey research shows that people still often turn to mainstream media despite low levels of trust and an expanding pool of content creators. In Western democracies, mainstream news outlets have so far shown restraint in their use of GenAI. While some media organisations are using AI to assist in news production and distribution, these uses have generally been transparent and responsible.

There is little evidence to suggest that mainstream news organisations are using AI to create misleading content or fake news. In fact, many outlets are taking steps to ensure that AI-generated content is clearly labeled and that editorial oversight remains in place. This responsible approach stands in stark contrast to fears that AI could dominate political news coverage and create mass disinformation. Instead, news organisations are leveraging AI tools to enhance journalistic processes, such as fact-checking or summarising data-heavy reports, rather than misleading their audiences.

The rise of news influencers, too, does not necessarily indicate a breakdown of the information ecosystem, although it presents a shift.

In France, for example, HugoDécrypte, the country’s largest news influencer, has grown into a respected media entity with mainstream, high-quality coverage. Trusted sources, whether they are news influencers or news outlets, have strong reputational incentives to appear credible, as their audience’s trust – and their reach – largely depends on it.

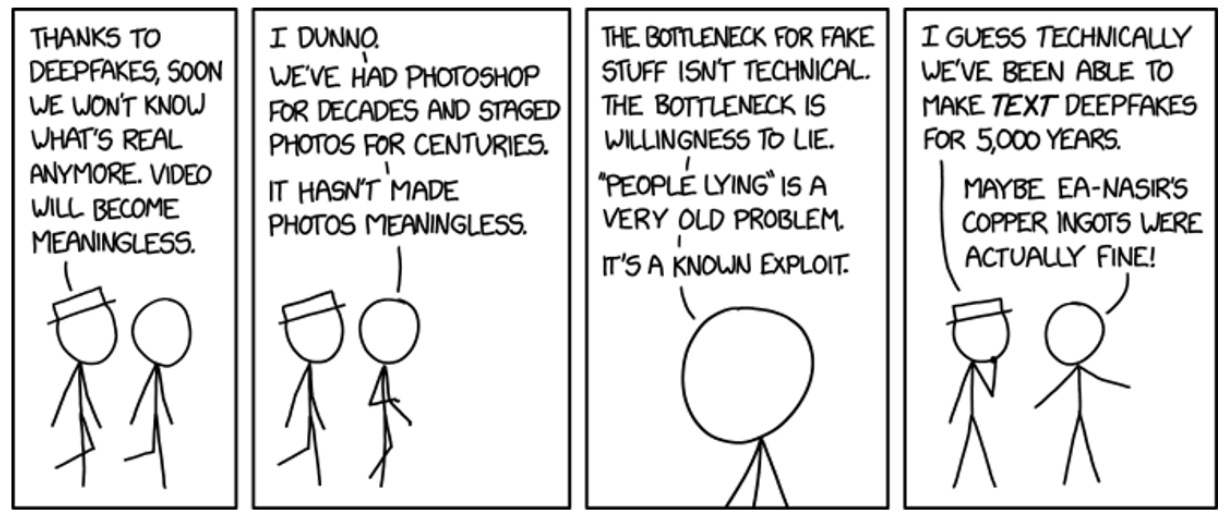

Using GenAI to mislead (and being exposed by competitors) would be a death sentence for most news sources. These imperfect but powerful reputational incentives largely explain why we generally try to avoid spreading falsehoods despite the ease of writing false text or making false claims.

In general, fears about AI-driven increases in misinformation are missing the mark because they focus too heavily on the supply of information and overlook the role of demand. People consume and share (mis)information because it aligns with their worldviews and seek out sources that cater to their perspectives.

Motivated reasoning, group identities, and societal conflict have been shown to increase receptivity to misinformation. For example, those with unfavourable views about vaccines are much more likely to visit vaccine-skeptical websites.

Sharing misinformation is often also a political tool, with especially radical-right parties resorting to it “to draw political benefits.’” And some people consume and share false information as a result of social frustrations, seeking to disrupt an “established order that fails to accord them the respect that they feel they personally deserve” and in hopes of gaining status in the process. Moreover, people do not even need to believe misinformation deeply to consume and share it, with some people sharing news of questionable accuracy because it has qualities that compensate for its potential inaccuracy, such as being interesting-if-true.

In addition, the people most susceptible to misinformation are not passively exposed to it online; instead, they actively search for it. Misinformation consumers are not unique because of their special access to false content (a difference in supply) but because of their propensity to seek it out (a difference in demand). The fact that demand drives misinformation consumption and sharing is perhaps the most important lesson from the misinformation literature.

As Budak and others write in this paper: “In our review of behavioural science research on online misinformation, we document a pattern of low exposure to false and inflammatory content that is concentrated among a narrow fringe with strong motivations to seek out such information”.

To wrap up, the presence of more misinformation due to GenAI does not necessarily mean that people will consume more of it. For an increase in supply to translate into additional effects, there must be an unmet demand or a limited supply. But the internet already contains plenty of low-quality content, much of which goes unnoticed. The barriers to creating and accessing misinformation are already extremely low, and we see no good reason to assume that people will show a higher demand for AI-generated misinformation over existing forms of misinformation.

Throughout history, humans have shown a remarkable ability to make up false stories, from urban legends to conspiracy theories. Misinformation about elections is easy to create. All it requires is taking an image out of context, slowing down video footage, or simply saying plainly false things. In these conditions, GenAI content has very little room to operate. Moreover, the demand for misinformation is easy to meet: Misinformation sells as long as it supports the right narrative and resonates with people’s identities, values, and experiences.

2. AI will increase the quality of election misinformation

The quality of AI-generated (mis)information has also sparked concerns about its potential to deceive people and erode trust in the information environment.

By quality, we mean that GenAI enables the creation of text, imagery, audio, and video with such lifelike fidelity that observers cannot reliably distinguish these synthetic creations from material produced through conventional human activity, whether written by an author, photographed with a camera, or recorded with a microphone. However, while information quality is crucial in many contexts – such as determining guilt in a criminal case – it plays a much smaller role in the acceptance and spread of misinformation, including in the context of elections.

The core premise of the argument is that the danger to elections stems from GenAI making election misinformation more successful and impactful by improving its quality—and that AI could facilitate this by lowering the costs of producing high-quality misinformation.

Let us look at this argument step by step. First, it is undoubtedly true that GenAI models can now produce high-quality misinformation. Plausible but false text, audio, and visual material are all within the purview of recent, widely available and usable AI systems. Will these AI systems be used to produce more high-quality misinformation than reliable information? Possibly, not least due to different reputational incentives.

News organisations and public-interest organisations depend on audience trust and brand reputation, so overt reliance on AI can backfire due to audience scepticism around AI and worries about damage to their trust and reputation from errors AI systems still make.

As a result, many outlets are still cautious in their AI use, which curbs its potential to be used too widely for fully AI-generated high-quality, true information. By contrast, misinformation producers face no comparable reputational constraint: faster, cheaper, and less labor-intensive production of high-quality material is an advantage for actors with little to lose from being caught fabricating content.

Moreover, professional journalism still enjoys stronger financial and institutional support than most misinformation operations, so the marginal value of additional cost reductions in producing high-quality content is lower for newsrooms than for bad-faith actors. For these reasons, we assume that reductions in the cost and turnaround time of high-quality outputs will confer proportionally greater benefits on misinformation producers than on reliable publishers – though GenAI can and is being harnessed to support responsible journalism as well.

However, this does not need to make a significant difference in the context of elections, for various reasons.

First, while GenAI undoubtedly enables the creation of more sophisticated false content and might benefit its producers more, it is not clear that higher-quality misinformation would actually be more successful in persuading or misleading people.

As we have already discussed, other factors – such as a demand for misinformation, as well as ideological alignment, emotional appeal, resonance with personal experiences, and the source – matter in determining who and why people accept and share misinformation. In other words: It is not just content quality that determines the spread and influence of misinformation. These factors will not be simply overwritten by an increase in content quality, and higher quality does not automatically lead to increased demand for misinformation.

Second, misinformation producers already have numerous tools in their arsenals to enhance content quality but still often resort to low- or even no-tech, low-effort approaches.

Image editing tools like Photoshop have long afforded people the ability to convincingly alter images or create new ones and “cheap fakes” , images taken out of context or plain false statements continue to persist and thrive. The main reason is that these basic forms of false or misleading information are ‘good enough’ for their intended purpose, given that they fulfill a demand for false information that is not primarily concerned with the quality of the content but rather with the narrative it supports and the political purpose it serves.

In other words: High-quality false information is not even needed. Political actors can simply twist or frame true facts in a way that supports their narratives – a technique adopted with frequency, including by (political) elites.

For instance, emotional (true) news stories about individual immigrants committing crimes are often instrumentalised by far-right politicians to support unfounded claims about migrant crimes and ultimately to justify anti-immigration narratives.

Moreover, there are trade-offs between content quality and other dimensions: Higher-resolution video can sometimes look too polished to feel authentic, while more realistic and plausible messages may be less visually arresting –truth is often boring and getting closer to it may inevitably make content less engaging.

Again, the problem is less the improvement in content quality than the demand for misinformation justifying problematic narratives. The source of misinformation also matters more than the quality of the content: A poor quality video taken from an old smartphone shared by the BBC will be much more impactful than a high-quality video shared by the median social media user. This is because people trust the BBC to authenticate the video. While GenAI can be used to increase the quality of the content, it can hardly be used to increase the perceived trustworthiness of the source.

In the future, GenAI tools will continue to improve and will certainly allow for more sophisticated attempts at manipulating public opinion. While we should keep an eye out and closely monitor and regulate harmful AI uses in elections, we do not believe that improvement in the quality of AI-generated content will necessarily lead to more effective voter persuasion.

Humans have been able to write fake text and tell lies since the dawn of time, but they have found ways to make communication broadly beneficial by holding each other accountable, spreading information about others’ reputations, or punishing liars and rewarding good informants. We expect these safeguards to hold even under conditions where content of lifelike fidelity can be created—and is available—at scale.

3. AI will improve the personalisation of information and misinformation at scale

A third common argument is that AI could enhance voter persuasion by supercharging the creation of highly personalized (mis)information, including personalised advertisements.

This argument is an extension of the concept of microtargeting to GenAI systems. Its popularity likely originates in the stories about the alleged outsized effectiveness of microtargeting in recent political contests, such as the supposed effects of the voter targeting efforts of political consultancy Cambridge Analytica during the 2016 European Union referendum and the 2016 US presidential election.

Microtargeting describes a form of online targeted content delivery (for example, as advertising or via users’ in-app feeds). Users’ personal data is analysed to identify the demographic or “interests of a specific audience or individual,” based on which they are then targeted with personalized messages designed to persuade them. GenAI, the argument goes, has removed constraints, thus allowing for the effective creation of individually personalised and targeted – and thus more persuasive – content at scale.

To understand this, we must briefly examine how GenAI systems enable the creation of personalised content. GenAI systems are pretrained on a large general corpus of data and then refined in a post-training stage by approaches such as reinforcement learning with human feedback (often abbreviated as RLHF).

However, current systems are unable to represent the full range of user preferences and values. It is also not clear how much information current systems encode about users themselves and how much this shapes the responses provided to users (even though this is happening to a degree), especially around political content. To our knowledge, OpenAI’s ChatGPT and Google’s Gemini are the first to incorporate a “memory” of a user’s preferences and potentially use these preferences to shape subsequent responses.

Beyond explicit ‘memory’ modules, models can also infer demographic and ideological traits from user prompts (given enough of the same) by leveraging correlations learned during pretraining. For example, experiments show that GPT-4 and similar LLMs are able to guess users’ location, occupation, and other personal information from publicly available texts, e.g., Reddit posts. This ‘latent inference’ route therefore complements, and may even supersede, memory-based customization, as systems can tailor replies for people ‘like you’ without needing explicit profile data.

In theory, any such system can be used to create content more aligned with the user’s worldview and preferences, content that should therefore be more persuasive. Personalised information is more convincing and relevant than nontargeted information and in many ways GenAI can help personalise information, potentially making it easier to persuade or mislead people.

However, there are several complications to this claim.

First, technical feasibility is not the same as actual effectiveness. The effectiveness of politically targeted advertising in general is mixed, with at best small and context-dependent effects. Previous studies on microtargeting and personalised political ads reveal that data-driven persuasion strategies often face diminishing returns without broader messaging alignment and credible, on-the-ground campaigning.

Experimental evidence from the US further shows diminishing persuasive returns once targeting exceeds a few key attributes. Sceptical voices would rightly argue that these findings do not take into account more powerful GenAI systems that can create personalized content at scale in response to customized prompts, all at little cost. However, evidence that the personalised output from AI systems is more persuasive than a generic, nontargeted persuasive message is thin.

A recent review of the persuasive effects of LLMs concluded that the “current effects of persuasion are small, however, and it is unclear whether advances in model capabilities and deployment strategies will lead to large increases in effects or an imminent plateau.”

Here and here Kobi Hackenburg and Helen Margetts found that “while messages generated by GPT-4 were persuasive, in aggregate, the persuasive impact of microtargeted messages was not statistically different from that of nontargeted messages” and that “further scaling model size may not much increase the persuasiveness of static LLM-generated political messages.”

The approach used to study such questions also makes an important difference: Studies measuring the perceived persuasiveness of (text) messages, by asking participants to rate how persuasive they find messages (e.g. this one), find much larger effect sizes than more rigorous studies that measure the actual change in participants’ post-treatment attitudes.

Similarly, the effect of political ads are much stronger when relying on self-reported measures of persuasion rather than actual persuasion, notably because people rate messages they agree with more favorably. In general, the persuasive effects of political messages are small and likely to remain so in the future, regardless of whether they are (micro)targeted and personalized, because mass persuasion is difficult under most circumstances.

Second, effective message personalisation plausibly requires detailed, up-to-date data about each individual. While data collection and data availability about voters is commonplace in many countries, including general data about traits that shape political attitudes and voting behavior, this is not uniform and consistent across countries.

For example, Kate Dommett and colleagues have found that parties in various countries routinely gather voter information via state records, canvassing, commercial purchases, polling, and now online tools, but the depth and type of data they can obtain depend heavily on each country’s legal frameworks, local norms, and the specific access rules of individual jurisdictions.

This data access is also shaped by the resources of political actors. Major parties generally have the resources to blend state files, purchased data sets, and digitally captured traces, whereas smaller parties often lack the funds or capacity to canvass intensively or pay for voter lists. Data about individual preferences, psychological attributes, and political views (or data associated with the same) are even more difficult to obtain. Even when user-level data needed for precise political personalization can be obtained, hurdles remain.

Datasets can be noisy and incomplete. Insufficient temporal validity cannot be ruled out, and weak links between the data and the attempted observed construct are possible. In addition, unforeseen external shocks not reflected in the data and other unobserved features not captured in the same—which would be meaningful for accurate personalization—compound these issues.

These challenges, which already hobble general attempts at predicting human behavior with traditional forms of predictive AI, will likely also complicate efforts to personalise content with GenAI systems in a way that leads to significant attitudinal or behavioral change, limiting the fidelity of any downstream personalized targeting.

Again, sceptical voices will argue that the data gathered directly from voters’ interactions with various AI systems or inferred from their interactions with the same will be superior in quality.

We think it is unlikely that this data would be exempt from these constraints or that it would become widely accessible outside of AI firms in a way that allows third parties (such as political parties and candidates or actors, or malicious actors) to easily create individually personalised messages that are then also targeted at the right person in various ways (not just within AI systems like chatbots but also in the form of ads or messages on other platforms). It is also unlikely that AI firms would allow such targeted personalization attempts within their own systems. Such data access and use would also be prohibited in many countries.

A schematic representation of how personalised messages could be delivered with AI. Illustration courtesy of the authors.

Theoretical pathways for the delivery of messages personalised with AI

Third, to be successful, a personalised message must reach the individual in question. This could happen in two ways (see the figure above).

In the first scenario, the message is delivered as part of the output of a GenAI system itself; for example, as part of a conversation with a user. However, so far, no AI or platform company has stated an intention to provide political parties (or other actors with political intentions) with access to their systems to allow them to create and deliver targeted personalized responses at scale to individuals (as was the case, at least to a degree, with Facebook during various political campaigns in the past).

Any such move would likely not go unchallenged and be subject to legal restrictions in various countries. In addition, it is questionable that most users would react positively to unsolicited political messages targeted to their preferences from AI systems.

In the second delivery scenario, a message is personalised using AI and information gathered about users and then delivered via existing digital platforms that they use daily. However, while GenAI systems (at least theoretically) reduce the cost of creating personalised information, it does not reduce the cost of reaching people individually via such means on these digital platforms. After all, targeting people with tailored messages online does not come for free, and certain audiences can be more expensive to reach and prices differ between political actors.

In addition, as mentioned earlier, attention is a scarce resource. Any piece of politically relevant information—including personalized messages or ads, regardless of their accuracy—must compete with other types of information for people’s attention. There is also evidence that the more people encounter ads that try to persuade them, the more skeptical they become of them and the worse they become at actually remembering the messages they have seen.

According to political campaigners, people often do not pay attention to personalized political advertisements. People also not only recognise such personalized messages but also actively dislike those that are excessively tailored or tailored using certain traits that are considered too personal. Their use can also lead to backlash in favorability if they come from parties that voters do not already agree with.

Fourth, the growing abundance of voter‐level data and increasingly sophisticated AI tools does not automatically ensure that political actors will deploy them.

Past microtargeting efforts offer a telling example: audits of 2020 US Facebook ads reveal that official campaigns used the most granular targeting mainly for highly negative messages, leaving much of the platform’s segmentation potential untapped. While political campaigns worldwide use targeted advertising, spending is mostly “allocated towards a single targeting criterion” (such as gender).

While wealthier countries and electoral systems with proportional representation see greater amounts of money focused on microtargeting combining multiple criteria, European case studies document legal, budgetary, and cultural constraints that have kept microtargeting in bounds.

Decisions in political campaigns (and in many other organisations) are also not solely, or even primarily, driven by data or new technological systems. Instead, human agency and organisational dynamics play a crucial role in determining when and why the same are, or are not, integrated into strategic decision-making. Organisational sociology theory helps explain this gap: Rather than acting as fully rational optimisers, political actors do not choose the absolute best option; instead, limited time, information, and ingrained habits lead them to pick the first solution that seems ‘good enough.’

For example, research on recent technology-intensive campaigns in the U.S. shows that party strategists often override model recommendations in favor of gut feeling, coalition politics, or candidate preferences.

Reinforcing this observation, a post-2024-election report from the Democratic-aligned group Higher Ground Labs observed that AI never dominated campaigning as some practitioners had predicted; most teams limited the technology to low-stakes tasks such as drafting emails, social posts, and managing event logistics, despite personalization with AI being technically feasible at the time (at least in theory). Only a handful ventured into more sophisticated uses like predictive modeling, large-scale data analysis, or real-time voter engagement, and even then, adoption was typically the result of individual experimentation rather than a structured organizational rollout.

The report stresses that many staffers simply lacked the know-how to push GenAI further and found little institutional guidance to help them do so, illustrating how entrenched routines, uneven skills, and weak organizational support continue to constrain the political uptake of advanced technologies.

A more recent survey found that while AI use among political consultants in the US is growing, it is mostly used for mundane admin tasks. All this is unlikely to be different for techniques enabling personalized messaging with the help of generative AI systems. In short, the beliefs, capacities, and priorities of political actors remain bottlenecks between what data and GenAI theoretically make possible in terms of personalization and what political campaigns actually do.

Fifth, and finally, the overall argument assumes that GenAI will be used to mislead more than to inform. However, AI chatbots will also be used by governments, institutions, and news organisations to inform citizens and provide them with personalized and reliable information (we discuss this further in the earlier section on mass persuasion). Personalised targeting with information is also not inherently anti-democratic; pluralist and deliberative theories of democracies hold that citizens participate most effectively when they receive information and representation that is salient to their lived interests and identities.

In this sense, AI-enabled tailoring can also fit into the long-standing democratic practice whereby parties canvass different interest groups with messages that match their specific concerns and build coalitions around such issues. Such tools could in theory also expand informational equality by delivering high-quality, language-appropriate content to communities that mainstream media often underserve, including linguistic minorities, first-time voters, and rural electorates. The democratic question is therefore less about whether personalisation occurs and more about whether citizens retain exposure to diverse viewpoints and qualitative information.

Conclusion

In short, while the anxieties around a GenAI in the context of elections are understandable, they are largely misplaced and consistently underestimate the stubborn realities of human behaviour and social dynamics.

The threat isn’t a tsunami of AI-generated content; it’s the pre-existing demand for information that confirms our biases. Flooding an already saturated information ocean with more drops of content – no matter how cheap to produce – does little to change who sees what, or why. Nor is the danger in higher-quality fakes. The effectiveness of misinformation hinges on the credibility of its source and its narrative appeal, not its technical perfection. A “good enough” lie that fits a political agenda has always been more potent than a perfectly rendered but irrelevant falsehood.

Finally, the promise of hyper-personalised persuasion at scale runs into the same bottlenecks that have always constrained political advertising: messy data, scarce attention, the high cost of delivery, and the simple, stubborn fact that it’s incredibly difficult to change someone’s mind.

We do not intend for this article to be taken as evidence that GenAI poses no risks at all, that policy responses are unnecessary, or that firms developing AI should receive carte blanche. We would also like to caution against either minimising or magnifying those risks on the basis of what is, at present, still a thin empirical record in some respect. While we think that the existing empirical and theoretical evidence shows that GenAI is unlikely to have outsized effects in the future, further empirical research across different political and cultural contexts is sorely needed to better understand where the risks lie and how they can be best addressed.

Ultimately, the discourse on AI and elections focuses too much on the technological supply and not enough on the human demand. While we must remain vigilant and adapt our regulatory and educational frameworks as we go along, we should not mistake powerful new tools for all-powerful forces. The core challenges to democracy – attacks on electoral systems and democratic freedoms by anti-democratic elites or declining trust in institutions – are fundamentally human problems. GenAI may be a new actor on the stage, but it is not rewriting the script.

This excerpt was adapted from the paper 'Don’t Panic (Yet): Assessing the Evidence and Discourse Around Generative AI and Elections', published by the Knight First Amendment Institute. The full version, which also contains all the references, can be found here.

In every email we send you'll find original reporting, evidence-based insights, online seminars and readings curated from 100s of sources - all in 5 minutes.

- Twice a week

- More than 20,000 people receive it

- Unsubscribe any time

signup block

In every email we send you'll find original reporting, evidence-based insights, online seminars and readings curated from 100s of sources - all in 5 minutes.

- Twice a week

- More than 20,000 people receive it

- Unsubscribe any time