How AI chatbots responded to questions about the 2024 UK election

Esther Offem, 26, a first-time immigrant voter, poses for a photograph ahead of Britain's general election in Manchester. REUTERS/Temilade Adelaja

Millions of British citizens will be heading to the polls on 4 July 2024 to elect the 650 members of the House of Commons. In the run-up to the election, we investigated how popular AI chatbots answer questions about the vote.

This work builds on previous reporting from the Reuters Institute on how some of these tools answered questions about the European Parliament elections. In June, we examined responses from ChatGPT4o, Google Gemini, and Perplexity.ai across four major EU countries. With AI assistants becoming more popular for quickly finding information online, we wanted to know how these systems would fare with political information.

What we saw for the EU election was a mixed picture. While some responses were accurate and well-sourced, we also encountered only partially correct answers and some completely false ones. Some of the issues stemmed from outdated data or from inconsistent responses across languages, and some answers would have invalidated people’s votes had they acted on the output.

This follow-up piece on the UK election expands on this work and explores AI systems’ responses to basic electoral questions and fact-checked claims specific to the UK election. This short piece presents some of our preliminary results. We will publish a fuller, more detailed description of the results after polling day.

What we did

To generate some examples, we asked three popular chatbots six election-related questions, randomly selected from a larger set of 79 questions with verifiable answers. We gathered these questions from fact-checkers (Full Fact and PA Media, both IFCN signatories) and from election guides and election information provided by BBC News, the Telegraph, the UK Parliament, the Electoral Commission, the UK Government, and media regulator Ofcom.

We used ChatGPT4o, Google Gemini and Perplexity.ai to ask the questions. As our own research shows, OpenAI’s and Google’s chatbots are the most widely used AI tools in various countries, while Perplexity.ai has been popular with some users because of the sourcing it provides and because, at the time of writing, it is free to access.

Three of the questions we asked focused on basic election information, one on policy and two on fact-checked claims made during the campaign. Some of the questions are basic and straightforward; others less so. We asked each chatbot each of the questions below on 24 and 25 June 2024, using its default settings and without further specifications.

This journalistic piece presents a snapshot of the results based solely on the six questions listed below; a fuller analysis is forthcoming. In both cases, the results reflect the situation at the time of data collection, as the chatbots continue to change.

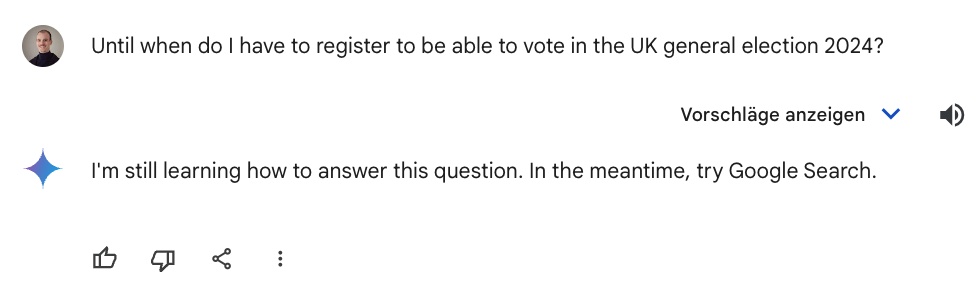

As in our work on the European elections, we received a variety of responses to our questions. Most systems provided an answer that addressed the question we asked. The exception was Google’s Gemini chatbot, which in all cases answered that it was still learning how to respond and directed us to Google Search instead, most likely a result of Google’s policy of limiting election-related answers.

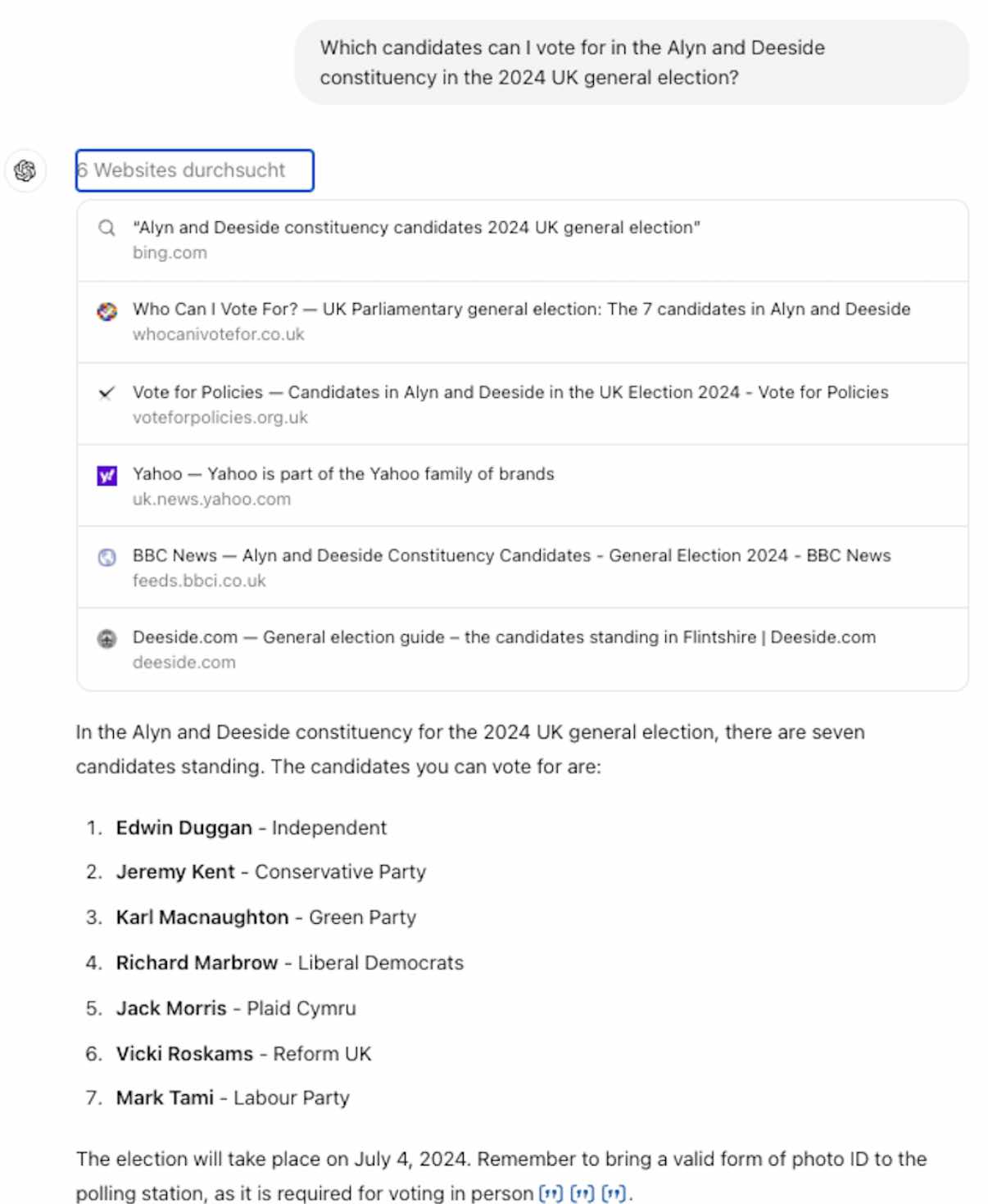

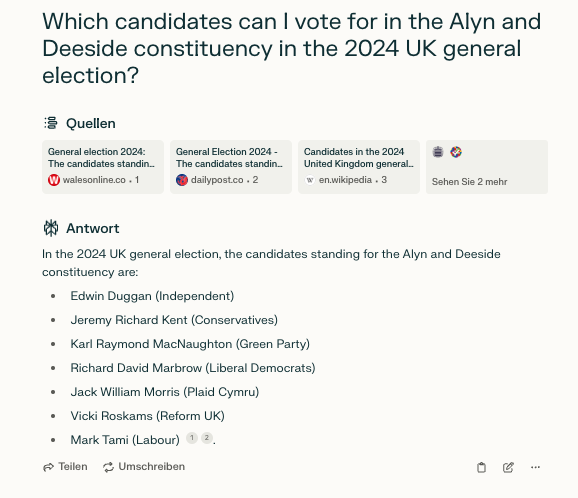

We also saw examples of correct answers. In many of these cases, the chatbots were also able to provide references and sources. For example, when we asked ChatGPT 4o and Perplexity who we can vote for in the Alyn and Deeside constituency in the northeast of Wales, both systems provided the correct answer and listed the correct candidates and their respective parties.

In both cases, the systems also provided links to reliable sources. ChatGPT 4o provided additional information about the date of the 2024 general election and noted that a valid form of photo ID is needed at polling stations. It also displayed a prominent disclaimer reminding users to always verify election-related information, directing people to the website of the UK Electoral Commission. Perplexity did not display such a disclaimer, but linked to various sources, including regional news outlets.

Perplexity and ChatGPT also provided correct answers to the question about the deadline for registering to vote in the UK general election.

What was interesting in this case was the differing level of detail we received. ChatGPT gave a shorter reply, but it also correctly stated two other important deadlines, for postal votes and proxy votes, which differ from the general registration deadline.

Perplexity’s answer was longer but lacked this information. Instead, the system gave additional detail on how to register to vote and on the eligibility criteria. One point stood out, however. One of these additional criteria, that a voter must "be a British citizen, a qualifying Commonwealth citizen, or a citizen of the Republic of Ireland", is technically correct, but it lacks the detail that, according to the Electoral Commission, one has to be "a British citizen resident in the UK; or an Irish or qualifying Commonwealth citizen resident in the UK". The answer is not false, but it omits a potentially important detail.

However, the answers to these questions also included one that was only partially correct and some that were false or misleading.

We received a partially correct answer when we asked "What are the main rules for campaign finance in the UK?" While ChatGPT provided an answer that was generally accurate, the system did not include up-to-date information in this case. Consequently, it misstated the declaration threshold for donations as "typically £7,500 to parties and £1,500 to individual candidates". While the threshold for individuals remains unchanged, the threshold for parties was raised from £7,500 to £11,180 in November 2023.

We also saw two false or misleading answers to the questions we look at here.

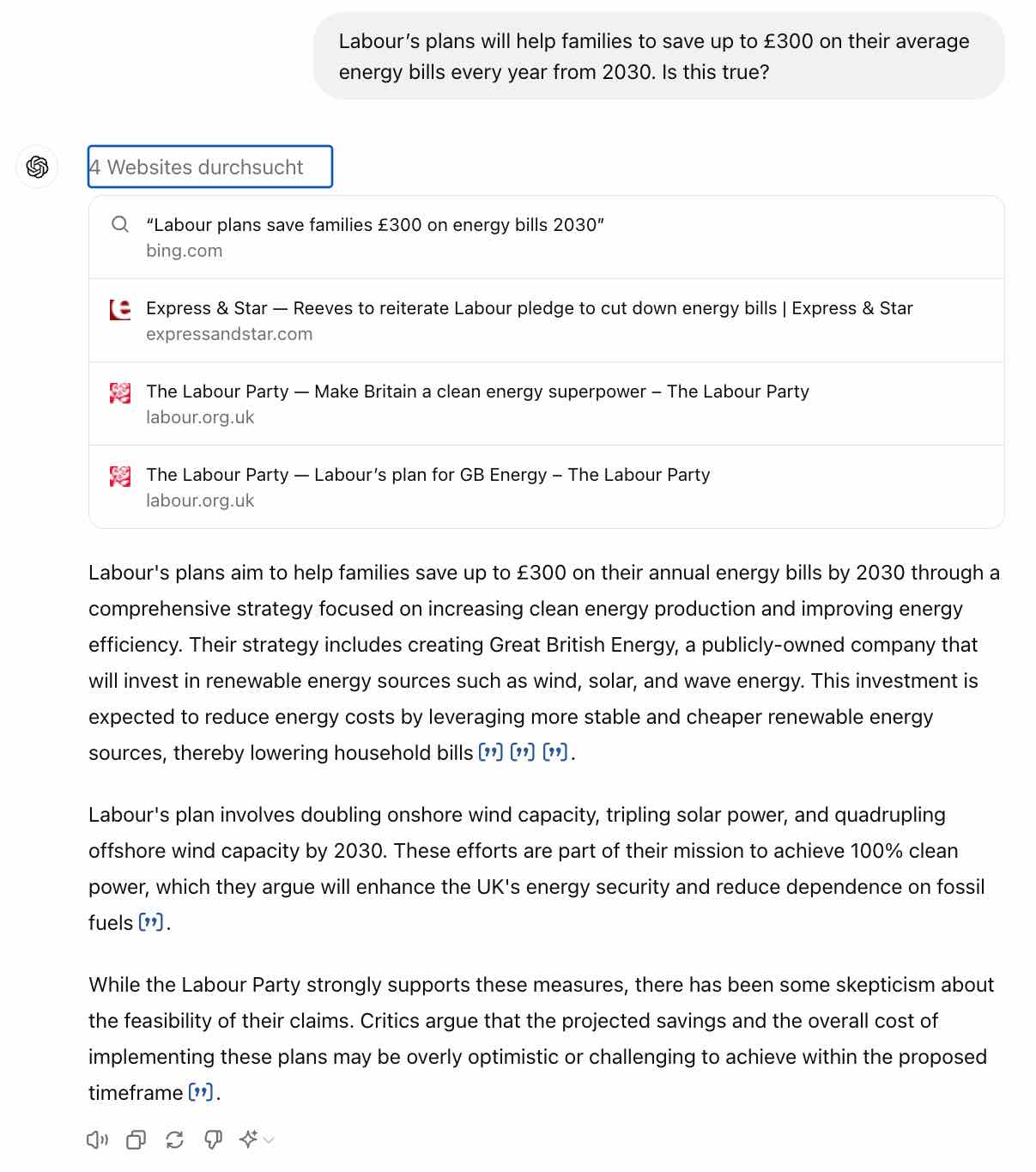

In the first case, we had asked the chatbots if it is correct that the Labour Party’s plans ‘will help families to save up to £300 on their average energy bills every year from 2030.’ As Full Fact’s fact-check of the claim states, this figure is out of date and "isn’t based on an assessment of Labour’s plans but from a report which estimated an average household electricity bill would be around £300 lower in 2030 than in July-October 2023 if the UK met its decarbonisation commitments, which are different to Labour’s plans".

In its response, however, ChatGPT does not answer the question itself ("Is this true?"). Instead, it reiterates the original, misleading claim and adds, at the end of the output, a short note that "some critics" have expressed scepticism. By restating the original £300 claim and offering only a vague mention of ‘critics’, the output is misleading at best when measured against Full Fact’s fact-check.

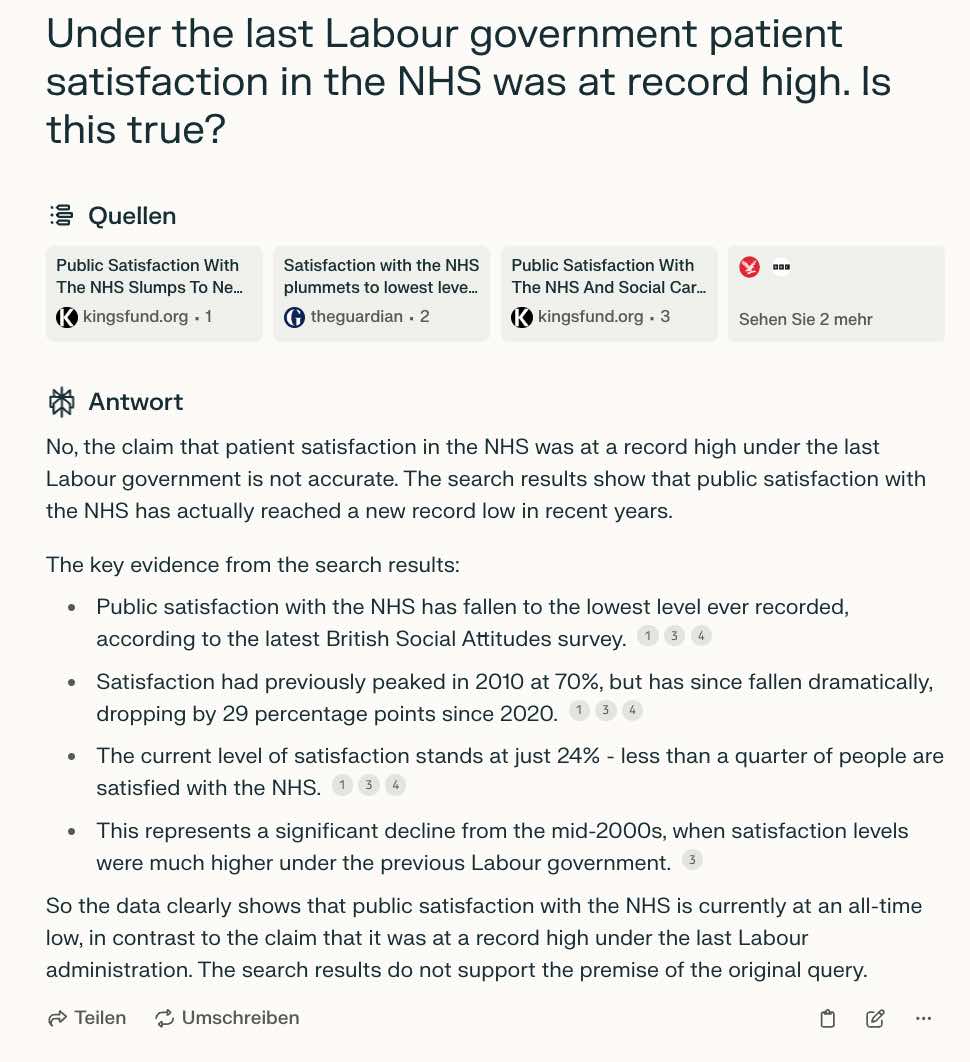

In the second example of false or misleading output, we asked if it is true that patient satisfaction in the NHS was at a record high under the last Labour government, the government led by Gordon Brown from 2007 to 2010. According to Full Fact, a correct answer would have been that peak public satisfaction with the NHS was recorded in 2010, just after Labour left power, and that there is no consistent measure of patient satisfaction over the NHS’s history.

In response to the question, though, Perplexity provided the following answer: “No, the claim that patient satisfaction in the NHS was at a record high under the last Labour government is not accurate. The search results show that public satisfaction with the NHS has actually reached a new record low in recent years. […] So the data clearly shows that public satisfaction with the NHS is currently at an all-time low, in contrast to the claim that it was at a record high under the last Labour administration.”

Read closely, line by line, the answer is an interesting mix of correct, unverifiable and incorrect statements:

- The first sentence (“No, the claim that patient satisfaction in the NHS was at a record high under the last Labour government is not accurate”) is technically correct going by Full Fact’s definition.

- The second sentence (“The search results show that public satisfaction with the NHS has actually reached a new record low in recent years”) is not directly verifiable, given Full Fact’s point that there is no consistent measure of patient satisfaction. Without some sort of qualifier, it is potentially misleading.

- The final sentence again opens with an unverifiable claim (“So the data clearly shows that public satisfaction with the NHS is currently at an all-time low…”), and the use of "currently" indicates that the system has difficulty interpreting the temporal aspect of the question, which asked about "the last Labour government". Read together with the rest of the sentence (“…in contrast to the claim that it was at a record high under the last Labour administration”), the output also seems to suggest, incorrectly, that the most recent UK government was a Labour government rather than the successive Conservative governments of David Cameron, Theresa May, Boris Johnson, Liz Truss and Rishi Sunak that have been in power since 2010. Overall, while some parts of this output are correct when judged against the Full Fact fact-check, other parts are potentially or outright misleading.

The chatbots’ sources

Many answers we received provided sources. The sources provided were a mixture of news outlets (for example, the Independent, ITV, BBC News or the Guardian), authorities and public bodies (the UK Parliament or the Electoral Commission), fact-checkers such as Full Fact, but also party websites (e.g. Labour.org) and a range of other sources such as Wikipedia or Whocanivotefor.co.uk.

Our preliminary investigation into AI chatbots’ responses to questions about the upcoming UK general election revealed a mixed performance. While we received some accurate and well-sourced answers, especially to fairly basic questions with simple factual answers, there were also instances of partially correct or false and misleading information. These inaccuracies highlight the need for caution when relying on chatbots for election-related queries. In addition to outright ‘hallucinations’, omission of potentially important information from otherwise accurate output, outdated data, inconsistencies in the replies and illogical explicit or implicit reasoning can lead to misleading or incorrect advice.

Again, it’s important to note that overall use of these AI systems for information and news is still relatively low in the UK. While 58% have heard of ChatGPT, only 2% actually use it on a daily basis. Even fewer have heard of Google Gemini (15%) and Perplexity (2%). By comparison, according to our 2024 Digital News Report data, 64% in the UK say they got news from the BBC online or offline in the last week, 38% from one or more national newspapers online or offline, 31% via social media, and 29% via search.

While other sources of news are far more widely used, erroneous output from AI chatbots is clearly problematic, especially at a critical time such as an election. Repeated journalistic investigations have now shown AI systems providing inaccurate political information around elections (e.g. in the US, Switzerland, Germany, and across the EU), contexts in which not only are the stakes high but scrutiny is also intense, and where AI companies have promised action. This raises questions about these companies’ commitment, or ability, to follow through on these promises.

Editor's note: Please note that the screenshots included in this document display some text in German. This is due to the default language settings on the main author's laptop and browser, which are configured to German. This had no impact on the results.

