Engaging audiences in the age of ChatGPT: 4 lessons from the Trust in News conference

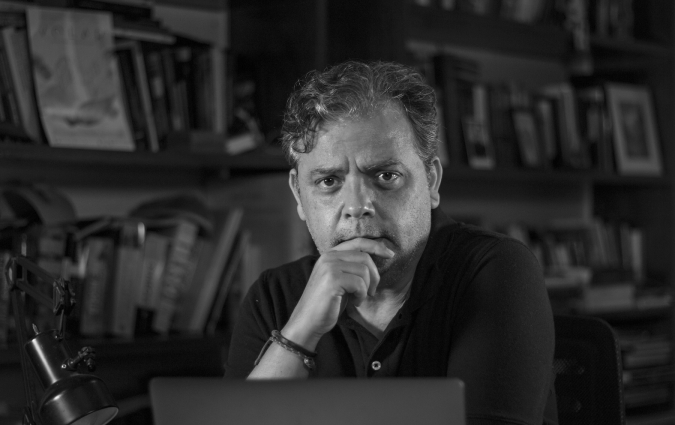

BBC's Disinformation and Social Media Correspondent Marianna Spring speaks with Deborah Turness, BBC News and Current Affairs CEO. Credit: Robert Tomothy/BBC

How can media organisations and platforms build trust through transparent journalism and technology? That was the question at the heart of the 2023 Trust in News conference, organised by BBC Academy Fusion on March 30.

“If you know how it’s made, you can trust what it says. Trust is earned,” said Deborah Turness, CEO of BBC News and Current Affairs, who kicked off the conference with a discussion on how pulling back the curtain on journalism, by being transparent about editorial practices and guidelines, is key to building trust with audiences. The conference then moved on to discussions with leading experts on disinformation, verification and open-source journalism, examining trust in news in a changing information landscape. Here are the main takeaways from the conference.

1. Open-source and verification tools can counter disinformation

To counter propaganda and disinformation spread by the Russian state, panellists Philip Chetwynd and Belén López Garrido described how journalists must now go above and beyond to report the truth. “It’s not good enough being an eye-witness, you need something more substantial,” said Chetwynd, Global News Director of Agence France-Presse.

Both López Garrido and Chetwynd explained how they use metadata, satellite imagery and open-source intelligence, in addition to traditional on-site reporting, to counter Russian propaganda. López Garrido, a news editor with the European Broadcasting Union, described a collaborative investigation into missing Ukrainian children in Russia, in which reporters even used propaganda shared by the Russian state as a tool to separate the lies from the facts.

2. User-generated content is a great resource but must be verified

Rozita Lotfi, Head of BBC Persian Service, and Sina Motalebi, Digital Editor of BBC Persian Service, described how they have been relying on videos and images from social media to report on the protests in Iran, as the BBC and other independent media outlets are not allowed to report from inside the country.

Relying on user-generated content, however, comes with challenges associated with objectivity and trust. “There is a selective nature in UGC,” said Motalebi. “We need to be mindful of that when we are telling the stories of the events in Iran through the eyes of people on the street.”

Beyond these objectivity challenges, great care goes into verifying what the images and videos actually depict. Lotfi and Motalebi said they use satellite imagery, street pictures and traditional reporting techniques to verify user-generated content.

3. Technical problems require technical solutions

Panellists including Jenks, BBC’s Chief Technical Advisor Antonia Kerle and CBC Senior Advisor Bruce MacCormack proposed technical solutions to improve trust in news as new technologies emerge.

What if seeing isn’t believing? Jenks, Kerle and MacCormack are part of the team behind Project Origin, a collaboration between media and technology organisations that aims to develop signals, such as cryptographic verification marks, tied to media content to prove the authenticity and source of a given piece of content, such as an image or a video.

4. Generative AI is about to challenge journalism in new ways

New generative AI tools make synthetic content easier and more accessible to produce. While generative AI has so far been used mostly for satire and entertainment, both its uses and the technology itself are growing exponentially. “As these tools get even better, flaws are trained out, fact-checkers and teams around disinformation are also going to start to struggle to pick up on these signs,” said deepfakes and generative AI expert Henry Ajder.

Ajder told BBC News’ disinformation editor Rebecca Skippage that disinformation loves a vacuum. In other words, news organisations will have to balance reporting on misinformation quickly with verifying information properly.