How media managers think AI might transform the news ecosystem

A view shows banners on the Tel Aviv University campus where Sam Altman, CEO of OpenAI, was due to speak in June 2023. REUTERS/Amir Cohen

The news industry is talking about AI. This is unsurprising, because AI models like ChatGPT seem to be capable of automating many of the cognitive tasks that underpin journalistic workflows, and those models seem to be steadily improving.

There is a sense that news may be on the cusp of great change, and that some of what is traditional and familiar in journalism may be subsumed into new, more automated ways of gathering, producing, distributing and consuming news.

As news producers learn about and experiment with AI, their early projects and prototypes tend to focus on opportunities that are well understood and that offer early returns on investment. These generally involve the automation of existing tasks within existing workflows, and essentially help to make the existing process of news production more efficient.

But behind this early focus on efficiency looms another question: how might AI fundamentally change the entire news ecosystem? Might some of the early AI-enabled developments that we see now – generative search, AI-enabled aggregation, consumer control of media experiences via AI, true conversational interfaces, easy consumer access to media-creating AI tools, task-based AI agents and AI-assisted newsgathering – be the precursors of a comprehensively different AI-mediated news environment? If so, then what might that look like?

This was the topic of a discussion at a gathering hosted by the Reuters Institute in early January. The 33 participants were managers directly engaged with innovation in journalism within large media organisations.

The session began with a short review of recent developments in large language models and other forms of AI, the range of core functionality that these models now provide and the early impact of that functionality on news. The participants then split out into three groups, where media managers were asked to focus on structural changes for the news ecosystem while considering different scenarios and avoiding wishful thinking. Following those discussions, all participants gathered to review and discuss the themes that emerged in each group. These are some of the themes discussed.

Most managers see AI as an existential threat

There was a clear consensus that AI presents the news industry with a very real risk of partial or near-complete obsolescence.

Statements like “it is an existential threat to all of us” were common and were particularly focused on the question of how news organisations could capture the value they create in an AI-mediated information environment. The ability of Large Language Models (LLMs) to use news content as informational raw material to produce other news experiences seemed to be top of mind, with comments like “our content has been stolen” and “it’s an IP issue” being prominent.

Several people highlighted the decline in referral traffic occurring simultaneously with the rise of AI competition as something particularly concerning, with one participant saying that “online is the new print” and suggesting that it might become difficult to get news audiences to visit news websites directly.

Some participants even raised the possibility that AI models tightly coupled to social networks might emerge as competing news providers. In the face of these risks, some participants worried that news publishers might be reluctant to change or innovate, or might pursue only incremental or efficiency-focused opportunities that won’t matter in the long run. As one participant put it, news companies might risk being “busy dying”.

Is it possible to maintain the status quo?

Discussions of threats were often accompanied by consideration of options for preserving some of the status quo of news publishing in an AI-mediated information ecosystem.

The scenario of using AI to enhance what news organisations currently do, without fundamentally changing it, was considered, with the extreme example of a “one-editor news website” provided by one participant. Others questioned whether efficiency alone would be a sufficient strategy for addressing the threats from AI, referring to efficiency as potentially just “a faster horse” in a world turning to automobiles.

There was considerable discussion of the so-called ‘human touch’ and the value of human intelligence and talent applied to news, especially as LLMs and other models were trained largely on huge volumes of median-quality content. One participant pointed to an AI-generated news video shown in the briefing as something “full of stereotypes”. Some were confident in the persistence of the value of talented human writing, with one participant stating that “there will always be huge demand for that definitive view of the world as presented by us” and another describing a temporary opportunity for providing “retro news” in an increasingly automated and confusing media environment.

The conversation also turned to ways in which news, or at least newsgathering, might be funded indirectly by the rising players in an AI-mediated ecosystem. Examples of recent deals made by OpenAI with the Associated Press and Axel Springer were mentioned, as were regulations in Australia and Canada compelling social media platforms to pay publishers for news content. But questions were raised about how many such deals OpenAI or others might make, and about the prospect that “any value we can get [would] cannibalise our business models”.

Participants also discussed risks inherent in this model, with one citing the earlier dependence of some publishers on payments from Facebook to produce Facebook Lives and the subsequent trauma when those payments disappeared overnight.

In general, newsroom managers felt it would be difficult to use AI to preserve the status quo by making it more efficient. But several suggested an efficiency-focused use of AI could provide a “runway” period during which news producers might learn to adapt to an emerging AI-mediated information ecosystem.

What might news publishers do with AI?

Enthusiasm for using AI to do journalism in new ways was also quite common among participants. Some of the examples mentioned included AI-augmented local reporting, hyperlocal stories from data and opportunities to make long story streams developed over many years more accessible through summarisation.

Some pointed to improved click-through rates (CTR) and engagement as a result of AI-generated summaries, and one participant observed that audiences who read these summaries were more likely to read the pieces in full. Others suggested that pieces could be adapted to different demographics and mentioned the opportunities AI translations provided for global news organisations and the potential of producing audio experiences using synthetic voices.

The potential for using AI to get new value from the archives of news providers with long histories was discussed, particularly in specialties like sports, cooking or finance and possibly as part of a “data and unique content” bundle or “suite of products” that combines manually-produced and AI-produced material.

What is the essence of what journalists do?

As might be expected, discussions returned time and again to the essence of professionally produced journalism and to how AI-generated content might disrupt it.

The rough consensus amongst participants was that this essence was centred on two concepts: facts and voice.

The centrality of facts (or “things I found out as a journalist”) as a differentiator included the production of new facts, the interpretation of facts and the trust by communities in those facts. As one participant stated, “Younger generations will be able to produce articles in five minutes with ChatGPT. The challenge will be producing facts”.

The centrality of voice (or “how I communicate and contextualise”) as a differentiator included personality beyond that available from the median AI-generated text, authentic writing from human experience and the visceral power of “voicy news”. In a media environment infused with AI-generated content, someone suggested that “the power of voice and personality” might become even more valuable.

“Is AI-created content really engaging?” questioned another media manager, referring to existing AI-generated content. Many responded it wasn’t, but some acknowledged it might become so.

Beyond facts and voice, there was some discussion of curation and editing services as specialised, differentiated value on top of content, especially if ‘hallucinations’ continue to plague the output of LLMs. One participant also pointed to the deep value of an ability to narrate and “to take responsibility for judgement”, while pointing out many news publishers don’t do this right now.

A few glimpses of an AI future for news

Much of our discussion looked at possible scenarios for how AI might fundamentally change the news industry in the coming years and decades.

Many mentioned the potential for a severely degraded information ecosystem, filled with “fake news and unreliable stuff” in the words of one of the participants. Others considered the potential for a much more competitive environment for news, with new AI-driven competition coming from ordinary people, personalities and microbrands equipped with AI tools, from existing aggregators and platforms and from storied brands embracing aggregation.

One comment in response to this suggested that the potential “fragmentation” of the news environment was actually a return to a period before the rise of the mass media, around 150 years ago. Media managers made arguments for both increased competition and increased collaboration within the news industry, envisioning an increasing rivalry for sources and scoops but perhaps also “shared news gatherers and fact checkers.”

One scenario many saw as likely was that AI would do “all commodity news”, especially “the SEO stuff” that today drives considerable traffic for some publishers, but also much of the day-to-day output of news agencies. The question of what exactly “commodity news” is was also discussed, without clear conclusions. A variant of this scenario was ‘the X scenario’ – the potential of a platform such as X with “easy access to original sources” to generate “news without much news publishing”.

Another scenario with considerable agreement was that of “extreme personalisation” of news consumption, although some participants questioned how far this should be pursued by news providers. “Should we chase this summarised, personalised world or does that miss the value?”, asked one participant.

The question of how and whether “integrity, truth [and] service value” might fit within this scenario was discussed, including caution about wishful thinking. It was pointed out that there was evidence that younger audiences were generally more comfortable with algorithms and personalisation, that the consumption preferences of young audiences were often dependent on context, and that AI-enabled reversioning and customisation might fit well with this.

A participant pointed out that research identified “personalisation, participation and personality” as the key elements of a news consumption experience, and that AI could support both personalisation and possibly participation (via interactive experiences and facilitation of conversation) if publishers were to “lean into it”.

In a comment that resonated with the group, one participant stressed the risk of news publishers becoming “opera houses” – elite, highly subsidised experiences consumed by relatively few people while “the popular music [of news] is played somewhere else”.

This was not a conversation with clear, well-defined conclusions. But there was a rough consensus that the scenarios for what AI might do to news depended on two significant questions.

The first was about how audiences would eventually react to AI-generated content: whether they would find it boring, “cold [and] unreliable” or “super convenient”. More broadly, this was also a question about “what does an informed public choose?” regarding its access to and use of information.

The second was about how AI models would eventually handle the recency of data, including their ability to assess, analyse, contextualise and narrate recent, fast-changing information, possibly from multiple sources. AI has not yet demonstrated the ability to do this with anywhere near the sophistication of journalists.

What we can learn from the conversation itself

These discussions reveal that the conversation about where AI may be taking journalism over the long term is still at a nascent stage. They suggest that the news industry is generally well aware of the potential for rapid, transformative change in the news ecosystem as a result of AI, that it has some early ideas about where this change may appear, and that it is willing to explore opportunities from AI as well as to react to or mitigate its threats.

Unsurprisingly, though, this conversation is still largely a reflection of the current competitive environment and is also partly centred on vaguely defined concepts such as “the human touch” and on aspects of journalism that may not be as relevant as they once were for some publishers, such as “shoe-leather reporting”.

There was some concern that conversations within newsrooms might be insufficient for the moment, with one participant mentioning the “depressing” interaction within an AI task force. Much of the discussion was relatively ‘ad hoc’ rather than systematic, and there is as yet no sign of a clear “theory of value” for news in an AI world.

Despite these limitations, there was a sense that this may be the time when the rules of AI news get laid down and that it may be important to define the end state that the industry would like to see and work backward from there. What will the emerging ecosystem look like? And, as one participant said, “what is the role of my brand in that ecosystem?”

A wider conversation?

Short, informal discussions like these may be useful as indications, but shouldn’t be expected to systematically reveal deep insights and foresight about how AI might structurally change the information environment. There are, however, much more methodical techniques available for understanding possible futures in uncertain situations.

One of these is ‘scenario planning’ – a formal process that gathers the collective input of a relatively large number of diverse but informed individuals and reduces that accumulated counsel down to a small set of distinct and mutually exclusive possible scenarios that ideally cover the space of possible outcomes.

Scenario planning as a practice was developed at the RAND Corporation and Shell in the 1970s and 1980s and is now a commonly used tool for governments, corporations and other large organisations that need to plan over decade-long horizons in uncertain conditions. Scenario planning has been applied to news in the past, including in the recent News Futures 2035 project, but not since LLMs and generative AI emerged as a credible alternative to human cognitive work.

An upcoming project, called AI in Journalism Futures (AIJF), is attempting to apply scenario planning specifically to the possible ways that AI might fundamentally change news over the long term. This project combines an open competition, in which the entries are short descriptions of potential scenarios, with a formal scenario planning process in which the participants are the winners of this competition.

The project is launching in January 2024 and will conclude in April 2024, with the results published in May. The AIJF project is funded by the Open Society Foundations’ Media and Disinformation group, and anyone interested in participating can do so here.

Many in the news industry found 2023 to be a confusing and even challenging year, in which new AI functionality seemed to appear weekly, speculation was rife and clarity about what it all meant was hard to find. By early 2024, however, the pace of dramatic new launches has slowed, the frenzy of hype around AI has abated a bit, we have all learned more about what these tools and technologies can actually do, and discussions about risks and opportunities are more grounded.

This is probably a good time, therefore, to begin a conversation about what journalism might look like in a world of ubiquitous AI – a conversation that will likely be ongoing for many years. Much like the discussion at the Reuters Institute, this conversation would ideally be open, frank and without wishful thinking. To move forward into whatever our AI future holds, and to have agency within that future, the news industry should keep talking about AI.

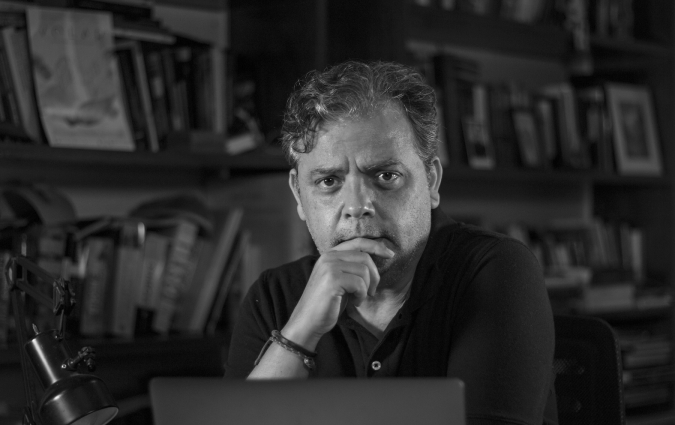

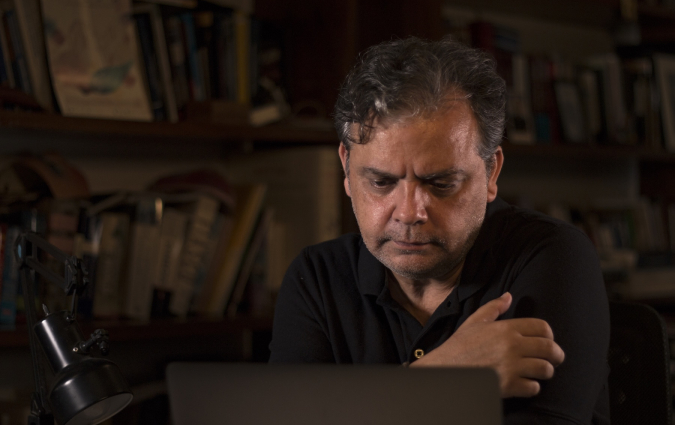

David Caswell is a consultant, builder and researcher focused on AI in news. David has led news product innovation at the BBC, Tribune Publishing & Yahoo! and has published peer-reviewed work. He is a regular speaker on our newsroom leadership programmes.
