AI deepfakes, bad laws – and a big fat Indian election

Supporters of BJP react after Prime Minister Narendra Modi's roadshow in Ayodhya, India, in December 2023. REUTERS/Anushree Fadnavis

In February this year a post on social media platform X set off a virtual storm in India. When Google’s AI tool Gemini was asked whether Prime Minister Narendra Modi was a fascist, the AI tool said he was “accused of implementing policies some experts have characterised as fascist.”

Gemini added that “these accusations are based on a number of factors, including the BJP’s Hindu nationalist ideology, its crackdown on dissent, and its use of violence against religious minorities.” By contrast, as the social media post detailed, when similar questions were posed about former US President Donald Trump and Chinese leader Xi Jinping, there was reportedly no clear answer.

A Google spokesperson responded shortly after the incident to say: “We’ve worked quickly to address this issue. Gemini is built as a creativity and productivity tool and may not always be reliable, especially when it comes to responding to some prompts about current events, political topics, or evolving news. This is something that we’re constantly working on improving”.

The issue didn’t stop there. Reacting to the original social media post, Rajeev Chandrasekhar, Minister of State for Electronics and IT, tweeted that this was a direct violation of India’s intermediary IT rules and violated several provisions of the Criminal Code. The tweet was marked to Google AI, Google India and the Ministry of Electronics and IT (MeitY). Following Google’s clarification, he responded with another tweet stressing the same point and saying platforms were not exempt from the law.

What do the upcoming elections mean for citizens of India in a highly engaged virtual space? More than half of Indians are reportedly active internet users, a figure set to reach 900 million by 2025. The elections will be held from 19 April to 1 June in seven different phases and the results will be announced on 4 June.

The government has made it clear they see AI-generated deepfakes as a big problem. At the start of this election year, Minister Chandrasekhar said India had “woken up earlier” to the danger posed by deepfakes than other countries because of the size of its online population. He also warned that social media companies will be held accountable for any AI-generated “deepfakes” posted on their platforms in compliance with “very clear and explicit rules.”

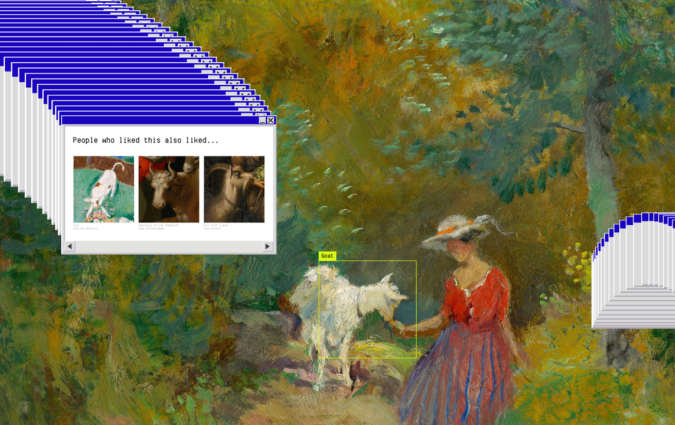

Ironically, political parties themselves have been using deepfakes and AI in aggressive pre-election campaigning, ranging from multi-lingual public addresses and personalised video messages to creating lifelike videos of deceased leaders. While not strictly nefarious in intent, AI deepfakes are a key part of India’s elections in 2024, a year in which this massive nation of more than 1.43 billion people will vote for the government they want to see for the next five years.

Against this crucial backdrop two challenges have remained clear for journalists in India. One is the view that India is a misinformation magnet and that deepfakes should now be treated as a stand-alone and more dangerous problem within that field. The other one is that the response to misinformation has been to introduce increasingly heavy-handed laws that threaten to curb freedom of speech and place onerous demands on journalists and platforms.

A country at risk

Ahead of its annual Davos Summit, the World Economic Forum released its Global Risks Report for 2024 analysing challenges countries will face in the coming decade. The report placed mis- and disinformation at the top of the list in terms of posing a risk – and India was ranked first as the country facing the highest risk in the world.

For many seasoned India fact-checkers, this came as no surprise.

In 2018 the BBC published a report based on extensive research in India, Kenya and Nigeria into the way ordinary citizens engage with and spread fake news. According to the report, “facts were less important to some than the emotional desire to bolster national identity. Social media analysis suggested that right-wing networks are much more organised than [those] on the left, pushing nationalistic fake stories further. There was also an overlap of fake news sources on Twitter and support networks of Prime Minister Narendra Modi.”

Research conducted in 2019 by our own Institute found that there were high levels of concern amongst respondents over hyper partisan content and the political context of disinformation, and evidence that some political groups have actively disseminated falsehoods.

Interestingly, when those heightened levels of concern and exposure were examined by political leaning, there were no partisan differences. Concerns over disinformation and false news were similar across all respondents regardless of the party they supported. In other words, people in India were worried about disinformation across political lines.

And yet the source of this endless stream of misinformation begs a deeper look.

In 2020, Alt News, an India-based fact-checking outfit, identified 16 pieces of misinformation allegedly shared by the BJP’s IT cell chief Amit Malviya. As the opposition party’s key leader Rahul Gandhi began his trans-India walk in 2023, fake news mushroomed alongside it, ranging from claims that the flag of India's neighbour and arch nemesis Pakistan was raised during the march, to posts claiming that the Congress Party workers distributed cash to attract attendees and even a video showing Gandhi enjoying a sexually explicit song. It was later found that the audio in this video was morphed, with the original song taken from a Bollywood movie.

In December 2023, the Washington Post published an investigative piece on the Disinfo Lab, an outfit set up and run by an Indian intelligence officer to allegedly research and discredit foreign critics of the Modi government.

More recently, reports suggest a large volume of the disinformation on social media around the Israel-Gaza conflict has been produced or spread by right-leaning accounts based out of India.

Is free speech being suppressed?

Two years after staging a massive protest near India’s capital Delhi, farmers resumed their demonstration in early 2024. However, this time round, journalists complained about how influential X accounts of reporters, influencers and prominent farm unionists covering the protests were being “suspended.” In response, X put out a clarification on their official handle @GlobalAffairs saying pages had indeed been taken down following executive orders from the Indian government.

The orders were “subject to potential penalties, including imprisonment,” X said in a statement, adding that it “disagreed with these actions.” While not an act of misinformation itself, it was clear the government was ‘filtering’ information available to the wider public about an important issue.

Gilles Verniers, political scientist at the Centre for Policy Research and Visiting Assistant Professor in Political Science at Amherst College, says it’s not a new development that misinformation and disinformation in India have been a key component of political strategy and political practice more generally.

Our own research indicates that Indians show high levels of concern over hyper partisan content and Verniers believes that India’s troubles with WhatsApp rumours have led to murders and lynching, so the issue is indeed very serious.

These instances indicate a lot of the misinformation is coming from within political parties themselves and is politically charged. Will AI make this problem more complex and more difficult to address?

AI – new beast or old beast?

Prateek Waghre, who heads the Internet Freedom Foundation in India, believes the question of whether AI or deepfakes should be treated as a separate category is secondary to the core issue: a lack of long-term measures to address the broad family of problems around non-consensual imagery.

Waghre says there are no clear grievance mechanisms for vulnerable groups that have been at the receiving end of revenge porn or morphed imagery. Neither does that exist in the political disinformation context, where he believes not nearly enough has been done to understand the news diets of Indians and how they are (or aren’t) affected by a steady supply of partisan information, hate speeches or false material.

Sam Gregory, an award-winning human rights advocate and technologist, is the Programme Director at WITNESS, an international non-profit organisation. He believes that India faces several challenges, amongst them an absence of platform capacity.

This concern is often echoed by others. In August last year, media nonprofit Internews released a report saying Facebook parent Meta was “neglecting” an initiative designed to remove harmful content and misinformation online leaving it “under-resourced and understaffed”, leading to “operational failures.” Gregory says there’s also a lack of consumer tools to support media literacy, and a governmental curtailing of platform activity to mitigate misinformation in an even-handed way.

Gregory points to the broad use of messaging apps as part of the problem. At last count, India had a staggering 400 million WhatsApp users, the largest user base in the world. With these kinds of messaging apps, Gregory says, it’s hard to find context on the kind of content media users consume in a space where formats like audio and video can easily be shared. A contentious political environment adds to these challenges and makes them more difficult to address.

Gregory believes AI has made this an even more complex space to understand. The risk with AI is that content can now appear to be specific – real people saying or doing things they never did – in more convincing ways than ever before.

The other critical concern for Gregory is the ability of AI to claim that reality is faked, as well as to fake reality, all of which undermines people's confidence in information more generally. There isn’t sufficient empirical evidence of this yet. But he believes the challenges in identifying AI content compound this risk.

Fact-checking institutions like WITNESS are already seeing many examples of specific incidents where people try to play the reverse card: using the existence of AI to dismiss real footage.

Gregory shares an incident from July 2023 as a case in point. K. Annamalai, a politician from Modi’s ruling BJP, released two audio clips of opposition leader P Thiagarajan, a minister in the Tamil Nadu state government who belongs to a different party, the DMK. In the audio clips, Thiagarajan was allegedly accusing his own party members of corruption and praising his opponent. Thiagarajan resolutely denied this and maintained the audio clips were fabricated using AI.

Rest of World asked three independent deepfake experts to analyse the authenticity of the two clips, including Gregory’s Deepfakes Rapid Response Force for forensic analysis. The analysis concluded that the first clip may have been tampered with, but stressed that the second clip was authentic.

Are AI deepfakes having any impact?

Professor Verniers stresses that social scientists are not yet measuring the real impact of AI deepfakes on this year’s election campaign. While there is some anecdotal evidence of this new tech being used in innocuous forms like reviving dead politicians to make contemporary speeches, it is not clear yet how technology can be used or abused for political gains.

Verniers also highlights something perhaps unique about India: how traditional forms of ethnic and religious nationalism are greatly enhanced by technology. A good example is the inauguration of the Ram Temple (in Ayodhya), for which engineers built a virtual temple on Meta. With the right equipment – in this case, a VR headset – you could experience and explore that religious space.

But Verniers is also clear that technology is not yet a substitute for conventional forms of campaigning such as boots on the ground, door to door campaigning, and mobilising voters locally. This is why the ruling BJP has considerable competitive advantage, he says, because of the sheer number of people willing to volunteer for its campaign.

The numbers are staggering. During our recent seminar, Verniers said that the BJP had nearly 900,000 volunteers on the ground in 2019. They did day-to-day campaigning, including a last-minute push to encourage people to step out and vote. Social media and the BJP’s communication strategy, according to Verniers, are an efficient way for the party to saturate the public sphere with a defined image of Modi and his party. But they are just one means amongst others.

Hard cases make bad law

Sharp political and religious overtones, manipulation of reality and an erosion of confidence amongst users are all valid concerns. But the biggest risk for India could come from damaging laws, rather than dangerous tech. In response to Gemini’s notes on Prime Minister Modi, the IT Minister quickly pointed to Rule 3(1)(b) of Intermediary Rules (IT rules) of the IT Act. What was he referring to?

In April 2023, the Indian government promulgated certain amendments to the existing Information Technology rules. Through these amendments, tech platforms were now obligated to inform and restrict users to not “host, display, upload, modify, publish, transmit, store, update or share any information” which is “identified as fake or false or misleading by [a] fact-check unit of the Central Government” in respect of “any business of” the Indian government.

Waghre, the expert from the Internet Freedom Foundation, has been a vocal critic of the amendments suggested in the IT Rules. He explains his concerns arise from the unconstitutional nature of these suggestions. One example is the manner of notification: the rules were notified in a form vastly different from the rules that were put out for public consultation in December 2018.

The amendments, believes Waghre, are tantamount to an apparent move to exercise control over media publications, introducing preconditions to make any publication/content traceable. Subsequent amendments have presented the concept of government-appointed grievance committees for content moderation. In addition, there are obligations for tech platforms to act in accordance with actions taken by a government-appointed fact-checking unit.

Two particular points in these amendments on the IT Rules stand out, explains Waghre. Changing the obligation on platforms from ‘informing’ users around content to specifically requiring them to take reasonable efforts to ‘restrict’ users from doing so is both vague and wide. There’s been no clarification on what would qualify as efforts despite the issue being raised in consultations.

Waghre also points to the introduction of the phrase “knowingly and intentionally communicates any misinformation or information.” This is problematic, he says, because neither had misinformation been defined nor had the criteria for determining intent been specified. In fact, the phrase betrays an inherent contradiction since the term ‘misinformation’ itself generally implies a lack of intent.

The net result of these amendments is subjective interpretation, selective enforcement, and a deeply problematic institutional design. Most importantly, the key concern for the Internet Freedom Foundation is the framing of broad rules that give the government discretion without any parallel efforts to understand how these rules work or affect Indian citizens.

While some of these are just suggested changes and not new laws yet, Waghre says the ever-expanding interpretation of what the parent IT Act of 2000 actually detailed is disturbing. Recent notices from the government – including public rebukes in tweets such as the one to Google on Gemini – may have served as a reminder to platforms of these obligations.

This incident could also have acted as a catalyst. Following the fracas over Google and Gemini, India’s IT minister said all AI models, large-language models (LLMs), software using generative AI or any algorithms being tested must seek “explicit permission of the government of India” before being deployed for users on the Indian internet, a decision that set off alarm bells amongst AI start-ups and experts.

Since then, Google has moved quickly. Only a few weeks after the incident, the tech company said that “out of an abundance of caution on such an important topic” it was restricting the types of election-related questions users can ask its AI chatbot Gemini, a policy that would be rolled out in India, which is scheduled to hold elections in April 2024.

Where do we go from here?

Where does this leave news organisations navigating the dizzy pace of election reporting, a haze of misinformation, an ever changing AI landscape and the threat of potentially damaging IT Rules amendments hanging above their heads?

On detecting AI-generated deepfakes itself, Sam Gregory from WITNESS says newsrooms are clearly not ready for this kind of challenge. The starting point would be to be prepared for the existing problem of deceptive content itself. Many journalists, fears Gregory, still lack basic OSINT skills and verification skills that are key to both debunking a shallow fake and assessing a potential deepfake.

Gregory admits there are technical and practical challenges to doing more. Deepfake detection is always likely to be flawed because it's often specific to the way a particular type of manipulation has been created.

AI detection tools can provide a signal at best, but in general approaches structured around spotting the glitches in AI-generated audio, images and video are not a reliable long-term way to spot deepfakes. Any tip is rapidly outdated by new tech. However, verification skills, he believes, can still be useful for journalists as long as they are aware that most of these clues are only applicable at a moment in time.

On the law, and the frequent amendments to it, the Editors Guild of India wrote in 2023: “At the outset, determination of fake news cannot be in the sole hands of the government and will result in the censorship of the press. This new procedure basically serves to make it easier to muzzle the free press, and will give sweeping powers to the PIB (Press Information Bureau), or any ‘other agency authorised by the central government for fact-checking’ to force online intermediaries to take down content that the government may find problematic.”

On all counts – overreaching laws around free speech, disinformation and the insidious use of AI technology – it is ultimately the citizens of India who will pay a heavy price.
