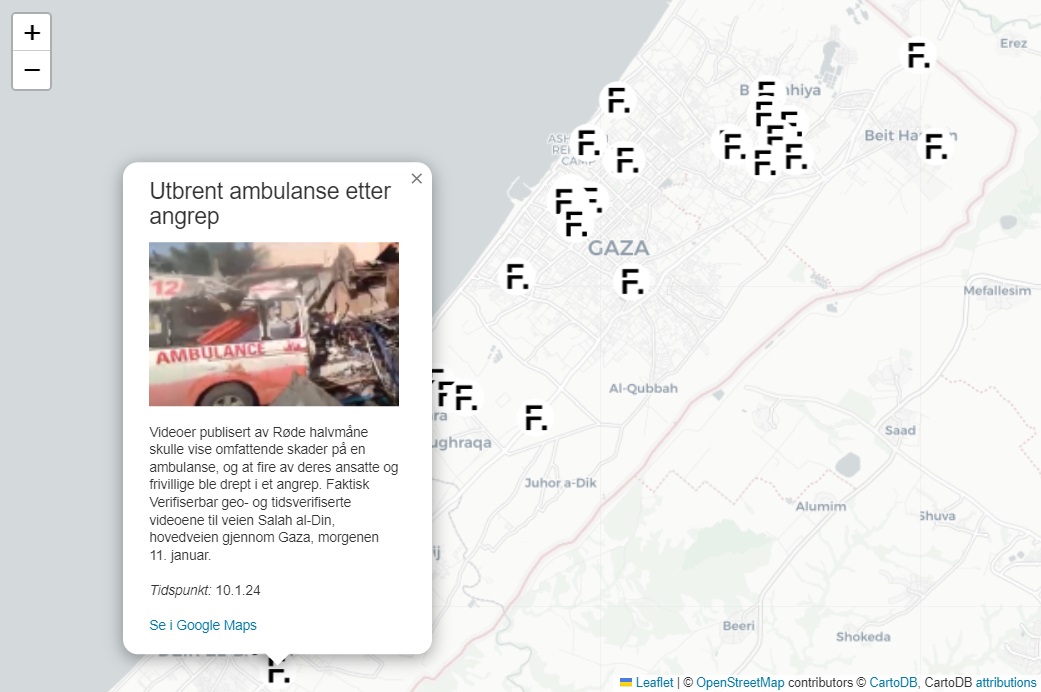

A screengrab of Faktisk Verifiserbar's map, made with the assistance of AI, which shows verified images and videos of attacks on hospitals and schools in the Middle East.

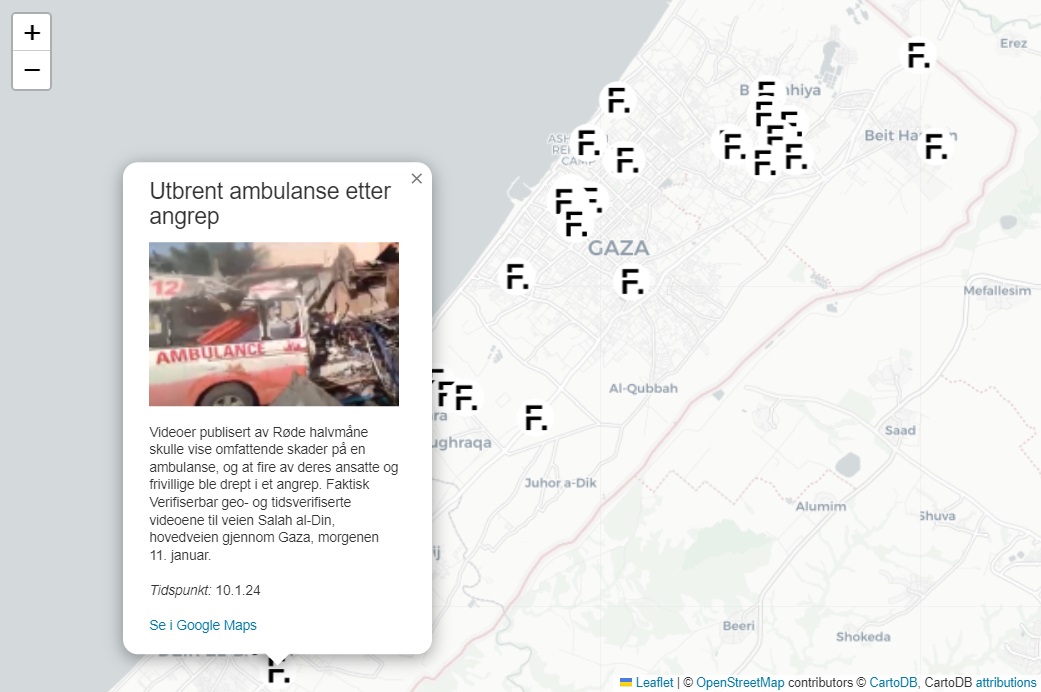

A screengrab of Faktisk Verifiserbar's map made assisted by AI which verifies images and videos showing attacks on hospitals and schools in the Middle East.

In a year when more than 50 countries are holding elections, bad actors are ramping up their disinformation campaigns with fake images, fake videos and fake audio created with generative artificial intelligence. But just as AI is revolutionising the spread of falsehoods, could it also help debunk them?

To answer that question, I spoke to three fact-checkers from Norway, Georgia, and Ghana who are applying artificial intelligence to their work. But our conversations soon turned to the limitations of this new technology when applied to diverse geographical contexts, for example in non-Western countries or in countries whose languages are underrepresented in the data used to train AI models.

The dawn of generative AI has made many fact-checking organisations embrace this technology in different ways, with the promise of accuracy and speed.

Faktisk Verifiserbar is a Norwegian cooperative fact-checking organisation that focuses mostly on verifying content from conflict areas using open-source intelligence (OSINT) techniques. They have been experimenting with different aspects of AI to facilitate and automate their work. Henrik Brattli Vold, a senior adviser for the organisation, explained they have been using an AI photo geolocation platform called GeoSpy, which extracts “unique features from photos and matches those features against geographic regions, countries and cities.”

They have also worked with AI researchers from the University of Bergen to develop tools that can make their work more efficient. One of them is a tool called Tank Classifier, which identifies and classifies a tank or an artillery vehicle present in a picture a user posts. Another one is a language detection tool to check what language is being spoken in a given video or audio file.

Faktisk Verifiserbar has used ChatGPT to help them visualise their OSINT investigations. Here’s how Brattli Vold explains it: “We used our database, which is a huge Google Sheet, and we structured it with ChatGPT in a way that we could write a precise prompt that would give us an embeddable map output every time we ask. We worked quite a bit with structured prompting, with the data interpreter function in ChatGPT just to be able to do that.” The methodology involves asking the tool to visualise geo coordinates in code and produce a downloadable HTML OpenStreetMap file which they refine and redesign. The result is a map which includes all the images and videos they have fact-checked throughout the ongoing war in Gaza.

“The time spent from idea to prototype is significantly shorter when using ChatGPT,” Brattli Vold says. “We were able to play around with our columns and the functionality we wanted almost in real time. ChatGPT would respond well to increasing complexity, so we were able to lock in the features we wanted while still experimenting with other parts of the code.”
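The map-building step described above can be illustrated with a short Python sketch. It is not Faktisk Verifiserbar's code: the column names (`title`, `lat`, `lon`, `status`), the sample rows and the function names are all assumptions made for illustration, and the coordinates are placeholders. It assumes the spreadsheet has been exported as CSV and produces a standalone HTML page that draws markers on OpenStreetMap tiles through the Leaflet library.

```python
import csv
import html
import io
import json

# Hypothetical rows standing in for an exported spreadsheet.
# Column names and coordinates are placeholders, not real data.
SAMPLE_CSV = """title,lat,lon,status
Example clip A,59.9139,10.7522,verified
Example clip B,60.3913,5.3221,unverified
Row with bad coordinates,not-a-number,10.0,verified
"""


def load_points(csv_text):
    """Parse rows and keep only those with usable coordinates."""
    points = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        try:
            lat, lon = float(row["lat"]), float(row["lon"])
        except (KeyError, TypeError, ValueError):
            continue  # leave malformed rows for a human to check
        if not (-90 <= lat <= 90 and -180 <= lon <= 180):
            continue  # also rejects NaN, which fails both comparisons
        points.append({
            "title": html.escape(row["title"]),    # popups render HTML
            "status": html.escape(row["status"]),
            "lat": lat,
            "lon": lon,
        })
    return points


PAGE = """<!DOCTYPE html>
<html><head><meta charset="utf-8">
<link rel="stylesheet" href="https://unpkg.com/leaflet@1.9.4/dist/leaflet.css">
<script src="https://unpkg.com/leaflet@1.9.4/dist/leaflet.js"></script>
<style>#map { height: 100vh; }</style></head>
<body><div id="map"></div><script>
const points = __POINTS__;
const map = L.map("map");
L.tileLayer("https://tile.openstreetmap.org/{z}/{x}/{y}.png",
  {attribution: "&copy; OpenStreetMap contributors"}).addTo(map);
const markers = points.map(p =>
  L.marker([p.lat, p.lon]).bindPopup(p.title + " (" + p.status + ")").addTo(map));
if (markers.length) { map.fitBounds(L.featureGroup(markers).getBounds()); }
else { map.setView([0, 0], 2); }
</script></body></html>
"""


def build_map_html(points):
    """Return a standalone HTML page with one marker per point."""
    return PAGE.replace("__POINTS__", json.dumps(points))


if __name__ == "__main__":
    with open("map.html", "w", encoding="utf-8") as handle:
        handle.write(build_map_html(load_points(SAMPLE_CSV)))
```

A script like this only plots what the spreadsheet contains. It drops rows whose coordinates cannot be parsed, but it cannot tell whether a valid-looking coordinate points to the right place, so each point still needs checking by a person.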

At Faktisk Verifiserbar they are developing a dashboard, with help from AI researchers at Norwegian universities, that will further embed AI within their newsroom. This dashboard will have three AI components and will allow them to do three things: drag and drop source videos and pick a key frame to prepare it for a reverse image search; scan key frames for text and run a search based on that text; and identify objects in a video and estimate how far they are from each other.

While this dashboard is still in development, Brattli Vold says that the need to work with AI comes from the growth of dis- and misinformation. “For us to make a credible and fully transparent fact-check, it can take from half an hour to three days, and a lot of times, when newsrooms need help, they need help now, not in three days,” he says.

The team at MythDetector, a fact-checking platform in Georgia, has also begun experimenting with generative AI. Editor-in-Chief Tamar Kintsurashvili says that artificial intelligence has been helping them detect harmful information that’s spreading on digital platforms through a matching mechanism where they label some content as false and AI is able to find similar false content online.

“When we label some content, then AI finds similar content,” she explains. “Hostile actors have learned how to avoid social networks’ policies so sometimes coordination is achieved not through distribution of content through Facebook pages, but through individuals mobilised by political parties or far-right groups. Since we have access to only the public pages and groups, it's not always easy to identify individuals through social listening tools, such as CrowdTangle.”

It is worth pointing out that Meta announced that it will be shutting down CrowdTangle by August 2024, months before the Georgian election in October. The company said it is replacing the tool with a new Content Library API.

While AI helps them flag content, Kintsurashvili says, they still have to go through it themselves because there’s some mismatching as this tool hasn’t been trained in the Georgian language. “It's saving us time when tools are working properly, but some tools are still in testing mode, and AI fact-checking tools are not very efficient yet, but I’m sure they will improve their efficiency in the future,” she says.

Kintsurashvili tells me that they can’t share data coming from Facebook Dashboard where they see these mismatches the most. What she says is that AI can successfully match a personality (for instance, former Georgian PM Bidzina Ivanishvili) or a media outlet (like Sputnik) mentioned in a post, but not relevant thematic posts.
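The label-and-match workflow Kintsurashvili describes can be illustrated with a minimal Python sketch. The source does not say how the tool her team uses works internally, so this is only an assumption-laden illustration: it compares character trigrams with Jaccard similarity, a simple technique that needs no language-specific tokeniser. The function names, the threshold and the sample strings are all hypothetical.

```python
def char_ngrams(text, n=3):
    """Return the set of character n-grams in lowercased, whitespace-normalised text."""
    cleaned = " ".join(text.lower().split())
    return {cleaned[i:i + n] for i in range(len(cleaned) - n + 1)}


def jaccard(a, b):
    """Share of n-grams the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_similar(labelled_false, candidates, threshold=0.5):
    """Match each candidate post to its closest labelled claim.

    Returns (post, claim, score) tuples for posts whose best score
    reaches the threshold, highest score first. The threshold value
    is an arbitrary assumption, not a recommended setting.
    """
    claim_grams = [(claim, char_ngrams(claim)) for claim in labelled_false]
    matches = []
    for post in candidates:
        grams = char_ngrams(post)
        best_claim, best_score = None, 0.0
        for claim, cg in claim_grams:
            score = jaccard(grams, cg)
            if score > best_score:
                best_claim, best_score = claim, score
        if best_claim is not None and best_score >= threshold:
            matches.append((post, best_claim, best_score))
    return sorted(matches, key=lambda m: m[2], reverse=True)


if __name__ == "__main__":
    # Hypothetical strings, used only to exercise the functions.
    labelled = ["The bridge collapsed yesterday"]
    posts = ["the bridge   collapsed yesterday", "Weather forecast: sunny tomorrow"]
    for post, claim, score in find_similar(labelled, posts):
        print(f"{score:.2f}  {post!r} matches {claim!r}")
```

Surface matching of this kind finds reposts and light rewordings of a labelled claim, but a post making the same point in different words would score low. That is one plausible reason a human still has to review what such a tool flags.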

While a number of prominent fact-checking organisations, like FullFact in the UK and Maldita and Newtral in Spain, have already been implementing AI tools to facilitate their work, outlets from other countries have been much more cautious. In smaller markets or markets with minority languages, newsrooms often question whether AI tools are accurate enough to help in their fact-checking efforts.

Rabiu Alhassan is the founder and Managing Editor of GhanaFact, a news fact-checking and verification platform in Ghana. While his organisation is currently developing some guidelines on the use of AI as a tool, he says they are very cautious in terms of applying it to their work and they won’t use it to help with any actual fact-checks.

“We like to think that our understanding of the nuances in the different countries in the sub-region should help us in building tools that are more tailored in tackling the problem of misinformation and disinformation,” he says. “Looking at the way AI is being deployed, we feel it will not do enough justice in either producing content or providing the nuances expected when doing fact-checks. These limitations come from the way these machine learning tools are built.”

MythDetector’s head of fact-checking, Maiko Ratiani, recalls, for example, a case a year ago in which ChatGPT gave a number of controversial answers in Georgian about politics in the country.

GhanaFact’s Alhassan highlights that many of the AI tools now pouring into the mainstream are built and trained from a Western perspective, focusing on content coming from Europe or North America.

For example, Rest of World tested ChatGPT-3.5’s ability to respond in languages like Bengali, Swahili, Urdu and Thai and found many problems in the kind of information the chatbot provided. Another Rest of World analysis showed how image-based generative AI systems have “tendencies toward bias, stereotypes, and reductionism when it comes to national identities.”

Researchers have emphasised these linguistic anomalies and digital technology experts such as Dr. Scott Timcke, Chinmayi Arun, and Jamila Venturini have cautioned against the development of AI with no regard to local contexts in the Global South.

The main language spoken in Ghana is English, but Alhassan points out that there are over 100 local languages which pose an additional linguistic challenge. In countries where these kinds of underrepresented languages are spoken, fact-checkers are less likely to be able to benefit from the fruits of this emerging technology.

MythDetector’s Kintsurashvili shares similar concerns. Even though her team has been successfully rolling out AI tools in their verification process, she says that AI companies should do much more to improve the deep learning process in markets like Georgia, whose language is not dominant online.

“Small language models have limitations in terms of detection of harmful content,” she says. “Due to limited reach, these smaller languages are not a priority for big tech companies. In the case of Georgia, in addition to the state language, we have minority settlements, and Armenian and Azerbaijani languages should also be considered, which makes the work of fact-checkers more complicated.”

For Brattli Vold the fact that Norway is such a small language area has also taught them that any new services will not prioritise their language. “An exception is ChatGPT, which is a terrific translator for us. We can prompt in our own language, and ChatGPT will understand the context most of the time,” he says. The tool is not perfect though: “There are some idiosyncrasies every now and then, when it will reply to us in Danish, for instance, or it will get confused with Norwegian context.”

For now, however, ChatGPT does have its limitations in Norway. Brattli Vold, whose team uses the tool for coding tasks, says they found that coding after 2pm could be headache-inducing as ChatGPT began to perform poorly. Their theory is that this coincides with the start of the working day in the United States, meaning that capacity is perhaps spread thinner. “Also, since this tool tends to hallucinate when providing content, we had to manually and painstakingly go through every geopoint to verify that the information is correct,” he says.

As Ghana gears up for a general election in December 2024, Alhassan and his team are working tirelessly to debunk misinformation. As they work on this, they’ve noticed AI-generated content and troll farms penetrating conversations online and driving electoral narratives. But there is only so much fact-checkers can do when platforms neglect to put resources into smaller markets.

The fight against misinformation is somewhat up in the air in Africa, as content moderators and data taggers working for Meta, OpenAI and TikTok are going to court to demand better pay and working conditions.

In 2023, more than 150 of those content moderators also established the African Content Moderators Union to bolster those efforts. Membership currently stands at 400, according to organisers. According to a report by Jacobin, Foxglove Legal, a UK-based tech justice nonprofit, says that Meta has responded to these complaints by discreetly contracting its moderation to another company.

Alhassan thinks big tech companies need to take responsibility and improve the quality of the content circulating on their platforms, particularly in the African context and in other non-Western markets. “There are some markets where [Big Tech] doesn’t generate funds and where they do not pay attention, but that does not take away how problematic the platforms are in those countries,” he says.

Alhassan takes his argument even further: “If more established democracies in the West are experiencing problems, and these are problems that are shaking up their democracies, then what can you say of very young democracies that have lots of existing fault lines that could easily be triggered using some of these platforms?”

Documents leaked from Facebook revealed that in India, arguably Meta’s largest market with over 340 million users across its platforms, mis- and disinformation were rampant as bots and fake accounts tied to the country’s ruling party and opposition figures attempted to sway the 2019 elections.

The documents also showed that Facebook did not have enough resources in the country and it was unable to grapple with the tsunami of false content. The same leaked documents also revealed that 87% of the company’s global budget for time spent on classifying misinformation was allocated to the United States, while only 13% was set aside for the rest of the world.

If social media platforms are unable to address regional and national contexts, can we trust AI platforms to do so?

Faktisk Verifiserbar’s Brattli Vold, who is most optimistic about the possibilities AI can bring to their work, says a good solution could be to implement their national GPT(s) into larger services. “I can't see us Norwegians using solely a national LLM (like NorLLM or NorGPT),” he explains. “They are much too small to be of great standalone value, but they are hugely important for our small language and cultural region, and I think these will be implemented in other tools going forward.”

Similarly, MythDetector’s Kintsurashvili from Georgia, who is also preparing for an election this year, thinks that tech companies and academic institutions should invest more in language learning modules and cooperate with other stakeholders to achieve the common goal of diversifying large language models (LLMs).

“Some universities and local organisations are working to make the Georgian language more flexible in the digital world,” she says. “I hope this language learning process with the small languages will be successful. It requires some time and resources not only from fact-checkers, but also from other organisations, from researchers, from universities, to be invested in making Georgian language more understandable in the digital world.”

This would entail working in natural language processing (NLP), a branch of artificial intelligence that focuses on enabling computers to understand, interpret, and generate human language in a way that is both meaningful and useful. Currently, for example, Ilia State University in Georgia is working with EU partners to foster language diversity in NLP.

For Brattli Vold, part of the solution also involves not leaving their fate in the hands of platforms. From its inception, Faktisk Verifiserbar has been an organisation founded on collaboration, as it arose from a cooperative effort between the major news media in Norway to train journalists in fact-checking and OSINT techniques and bring those skills back to their respective newsrooms.

It is this ethos that is motivating Faktisk Verifiserbar to make all these AI tools open-source and upload them to GitHub, where anyone can fork them and keep working on them.

“For the smaller news areas, I would say it's so important to work together against disinformation, and I'm hoping that smaller newsrooms and newsrooms from smaller-language areas can cooperate more on this kind of methodology,” Brattli Vold says. “We shouldn’t compete on stories. We should cooperate on methodology as we all need to fight disinformation. That's our major enemy, not the newsroom next door.”